Ensuring your website’s pages are indexed by Google is essential for visibility and search engine ranking. However, it’s not uncommon for new content to face indexing challenges. This article explains the most common reasons your pages might not appear in Google’s search results, from technical mishaps to content quality issues, and what you can do about each. Whether you’re a seasoned webmaster or a beginner, understanding these hurdles will help you get your pages crawled, indexed, and visible in search.

Understanding Why Google Isn’t Indexing Your Pages

When your web pages aren’t appearing in Google search results, it can be frustrating. Indexing issues can arise from several causes, and identifying the cause is the first step toward fixing it.

1. Lack of Quality Backlinks

Backlinks are an important factor in determining your site’s authority and relevance. They signal to Google that your content is trusted by others. If your pages lack sufficient quality backlinks, they may not be prioritized for indexing. To address this, aim to build quality backlinks by engaging in outreach efforts, guest blogging, and leveraging social media.

2. Blocked by Robots.txt

Googlebot, the crawler Google uses to discover and fetch web pages, will not crawl pages that your robots.txt file disallows. Check your site’s robots.txt file to make sure it isn’t inadvertently blocking important pages, and confirm that the directives allow Googlebot and other search engines to crawl them unless you deliberately want certain sections kept out. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed without its content if other pages link to it, so use a noindex directive when you need to keep a page out of the index.
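A quick way to test a handful of URLs against your robots.txt rules is Python’s standard `urllib.robotparser` module. The sketch below assumes you have pasted the file’s contents into a string. The sample rules, URLs, and the `blocked_urls` helper are hypothetical. This parser applies the first matching rule and only understands plain path prefixes. Google, by contrast, uses the most specific (longest) matching rule and supports `*` and `$` wildcards. Treat the result as a sanity check, not a verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace it with your site's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots.txt forbids `agent` from crawling."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines and marks the rules as loaded,
    # which can_fetch() requires before it will return True for anything.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    pages = [
        "https://example.com/blog/new-post",
        "https://example.com/staging/new-post",
    ]
    for url in blocked_urls(ROBOTS_TXT, pages):
        print("Blocked for Googlebot:", url)
```

With the sample rules, only the staging URL is reported as blocked.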

3. Noindex Tags and Meta Directives

A noindex directive tells Google not to index a page. You can set it either in a robots meta tag (`<meta name="robots" content="noindex">`) or in an X-Robots-Tag HTTP response header. Webmasters sometimes apply these settings during development and forget to remove them at launch. Review your pages’ source code and response headers to make sure no unwanted noindex directives are present. Keep in mind that Google can only see a noindex directive on a page it is allowed to crawl.
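A noindex directive can appear in a robots meta tag or in an X-Robots-Tag HTTP response header. To check a page for both, you can scan its HTML and its headers. This is a minimal sketch using Python’s built-in `html.parser`. It assumes you have already downloaded the page and its headers, and the function and class names are illustrative. It treats `none` as equivalent to `noindex, nofollow`. For simplicity, it does not distinguish which crawler a header rule such as `otherbot: noindex` targets.

```python
from html.parser import HTMLParser


def _has_noindex(value: str) -> bool:
    """True if a directive list such as "noindex, nofollow" blocks indexing."""
    # Drop an optional "crawler:" prefix (e.g. "googlebot: noindex") from each token.
    tokens = [t.split(":")[-1].strip() for t in value.lower().split(",")]
    return "noindex" in tokens or "none" in tokens


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.append(attrs.get("content") or "")


def noindex_sources(html: str, headers: dict[str, str]) -> list[str]:
    """List where a noindex directive was found: meta tag, header, both, or neither."""
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any(_has_noindex(d) for d in parser.directives):
        sources.append("meta tag")
    # HTTP header names are case-insensitive, so compare them in lower case.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and _has_noindex(value):
            sources.append("X-Robots-Tag header")
            break
    return sources
```

An empty list means neither the markup nor the headers you supplied carry a noindex directive.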

4. Duplicate Content Issues

Duplicate content occurs when identical or very similar content appears on multiple URLs, either within your own site or across different sites. When Google finds duplicates, it typically picks one version as canonical and may leave the others out of the index. Use canonical tags to designate the preferred version of a page. Also make sure your content is unique or sufficiently distinct, so that the pages you care about are the ones that get indexed.
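A canonical tag looks like `<link rel="canonical" href="https://example.com/page">` in the page’s `<head>`. The sketch below extracts it with Python’s standard library, so you can confirm that each duplicate points at the version you intend. The helper names are hypothetical. The function returns `None` when the tag is missing or when a page declares several different canonicals, since conflicting tags send Google mixed signals.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Records the href of every <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # rel may hold several space-separated values.
        rels = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attrs.get("href"):
            self.hrefs.append(attrs["href"])


def canonical_url(page_url: str, html: str) -> Optional[str]:
    """Return the page's absolute canonical URL, or None if it is missing or ambiguous."""
    parser = CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs against the page's own URL before comparing them.
    found = {urljoin(page_url, href) for href in parser.hrefs}
    return found.pop() if len(found) == 1 else None
```

For example, a tracking-parameter URL such as `https://example.com/shoes/red?utm_source=x` whose canonical tag says `/shoes/red` resolves to `https://example.com/shoes/red`.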

5. Low-Quality Content

Google aims to provide users with high-quality, relevant content. Pages with thin or low-value content may not be indexed if they do not meet Google’s quality standards. Improve content quality by offering detailed, valuable, and original information. Consider adding more in-depth analysis, visuals, or related resources to make your pages more attractive to search engines and users alike.

| Common Issue | Explanation | Solution |
|---|---|---|
| Lack of Backlinks | Pages without backlinks may not be deemed reliable or popular by Google. | Engage in strategic link building. |
| Robots.txt Block | The robots.txt file may block Googlebot from crawling pages. | Verify and update your robots.txt settings. |
| Noindex Tags | Noindex meta tags explicitly instruct Google not to index a page. | Review HTML code and remove noindex tags from important pages. |
| Duplicate Content | Similar content across different pages can reduce indexing priority. | Use canonical tags and ensure content uniqueness. |
| Low-Quality Content | Thin content or pages without substantial value may not be indexed. | Enhance content with more detailed and valuable information. |

Why does Google not index my pages?

Technical Issues Affecting Indexing

Technical problems can prevent Google from indexing your pages. Here are some common technical issues:

- Robots.txt Blocking: Ensure that your robots.txt file isn’t inadvertently blocking Googlebot from accessing your site. Check for any disallow directives that might impede crawling.

- Noindex Meta Tags: Verify that pages you want indexed don’t carry a noindex meta tag, which instructs search engines not to index a page. A tag added by accident is enough to keep a page out of the index.

- Sitemaps: Make sure your XML sitemap is correctly formatted and submitted to Google Search Console. An outdated or incorrect sitemap can result in pages not being indexed.
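Before submitting a sitemap, it is worth checking it for the mistakes that most often make one unusable. The sketch below uses Python’s `xml.etree.ElementTree` to parse a standard `<urlset>` sitemap. It flags a wrong root element or namespace, relative URLs, duplicate entries, and files over the sitemaps.org limit of 50,000 URLs. It is an illustration under these assumptions, not a full validator. Sitemap index files and the 50 MB size limit are not handled, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def sitemap_problems(xml_text: str) -> list[str]:
    """Return a list of problems found in a <urlset> sitemap (empty if none)."""
    root = ET.fromstring(xml_text)  # raises ET.ParseError if the XML is malformed
    problems = []
    if root.tag != NS + "urlset":
        # Also catches a missing or misspelled xmlns declaration.
        problems.append(f"unexpected root element {root.tag!r}")
    locs = [(el.text or "").strip() for el in root.iter(NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} limit; split the file")
    seen = set()
    for loc in locs:
        parsed = urlparse(loc)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        if loc in seen:
            problems.append(f"duplicate entry: {loc}")
        seen.add(loc)
    return problems
```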

Content Quality and Uniqueness

The quality of your content strongly influences indexing and ranking. Google favors sites with valuable, unique content.

- Thin Content: Pages with sparse or minimal content may not be indexed. Ensure each page provides comprehensive information on its topic.

- Duplicate Content: If your site features content duplicated from other websites, Google might skip indexing. Providing unique content is crucial.

- Low-Quality Content: Enhance the value of your content by making it informative and engaging, thereby encouraging indexing.
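To triage a large site, you can flag pages with very little visible text for manual review. This sketch counts words outside `<script>`, `<style>`, and `<noscript>` elements using Python’s `html.parser`. The 300-word threshold is an arbitrary assumption, because Google publishes no minimum word count. A short page that fully answers a query can be perfectly fine, so a low count is a reason to look, not proof of thin content. The helper names are illustrative.

```python
from html.parser import HTMLParser

# Hypothetical threshold: Google publishes no minimum word count.
MIN_WORDS = 300


class VisibleTextParser(HTMLParser):
    """Collects text outside <script>, <style> and <noscript> elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # greater than 0 while inside a skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags such as <br/> or <script src="..."/> hold no text

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if not self.depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    parser = VisibleTextParser()
    parser.feed(html)
    return len(" ".join(parser.chunks).split())


def thin_pages(pages: dict[str, str], min_words: int = MIN_WORDS) -> list[str]:
    """Return the URLs (keys of `pages`) whose visible text falls below min_words."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)
```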

Website Authority and Trust

Google considers the overall authority and trustworthiness of your site in its indexing decisions.

- New Websites: If your website is new, Google might take longer to index as it builds trust. It requires consistent updates and a growing backlink profile.

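- Low Authority: Sites with few quality backlinks might struggle with indexing. “Domain Authority” itself is a third-party score from Moz, not a metric Google uses, but the backlink profile it estimates still matters. Building quality backlinks helps strengthen your site’s authority.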

- Spammy Behavior: Any practices perceived as spammy, such as excessive keyword stuffing, can hurt indexing possibilities. Focus on genuine and ethical SEO practices.

How do I get Google to index my pages?

To get Google to index your pages, you need to ensure that your website is accessible, has quality content, and is properly optimized for search engines. Below are detailed explanations and steps to help you in this process.

Ensure Your Website is Accessible

For Google to index your pages, the search engine’s crawlers need to access your site easily. Here are steps to ensure accessibility:

- Create a Sitemap: Generate an XML sitemap to list all your website’s pages. Submit this sitemap to Google Search Console to make it easier for Google to discover all the pages you want indexed.

- Check for Robots.txt File: Make sure your robots.txt file isn’t blocking Googlebot from accessing specific sections of your site. This file should be configured to allow Googlebot to crawl all necessary pages.

- Verify Website Loading Speed: Ensure that your site loads quickly. If your server responds slowly or returns errors, Googlebot may reduce how much of your site it crawls, which delays indexing. Optimize your images, use caching, and consider a content delivery network (CDN) if necessary.
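If your CMS does not generate a sitemap for you, a basic one is easy to build. This sketch uses Python’s `xml.etree.ElementTree`. It assumes you can supply a mapping of absolute URLs to last-modified dates in `YYYY-MM-DD` form, and the function name is illustrative. ElementTree escapes characters such as `&` automatically, which is a common source of broken hand-written sitemaps.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, str]) -> str:
    """Build a <urlset> sitemap from {absolute_url: lastmod} pairs.

    lastmod is assumed to be a W3C Datetime string such as "2024-05-01";
    pass an empty string to omit it for a URL.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # & and < are escaped on output
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

You could save the output as `sitemap.xml` at your site root and submit it in Search Console. You can also reference it from robots.txt with a `Sitemap:` line.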

Optimize Your Content for Search Engines

Optimizing your content effectively can expedite the indexing process and improve your visibility. Implement the following practices:

- Use Relevant Keywords: Include targeted keywords naturally within your content, meta descriptions, and image alt text. This helps Google understand the context and relevance of your pages.

- Write Unique and High-quality Content: Ensure that your content is informative, well-written, and unique. Duplicate content can lead to pages being ignored by Google, impacting your site’s ranking.

- Optimize Meta Tags: Write concise, descriptive title tags and meta descriptions. Titles help Google understand the topic of your page, and both elements shape how your result appears in search and encourage users to click your link.
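The following sketch checks a page for a missing or overly long title tag and meta description. The 60- and 160-character limits are rule-of-thumb assumptions, not Google requirements. Google truncates snippets by pixel width and may rewrite titles and descriptions, so use the output as a prompt for review. The helper names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional

# Rule-of-thumb limits (assumption), not values published by Google.
TITLE_MAX = 60
DESCRIPTION_MAX = 160


class HeadParser(HTMLParser):
    """Captures the <title> text and the meta description, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.in_title = False
        self.title = ""
        self.description: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True
        elif tag == "meta":
            attrs = dict(attrs)
            if (attrs.get("name") or "").lower() == "description":
                self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def meta_tag_issues(html: str) -> list[str]:
    parser = HeadParser()
    parser.feed(html)
    title = parser.title.strip()
    issues = []
    if not title:
        issues.append("missing <title>")
    elif len(title) > TITLE_MAX:
        issues.append(f"title is {len(title)} characters")
    if parser.description is None:
        issues.append("missing meta description")
    elif len(parser.description) > DESCRIPTION_MAX:
        issues.append(f"meta description is {len(parser.description)} characters")
    return issues
```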

Utilize Google Search Console Effectively

Google Search Console is a powerful tool to help manage your website’s presence in Google search results. Use it by following these steps:

- Submit Sitemaps: Use the Search Console to submit your XML sitemap, ensuring it includes all active pages you want indexed by Google.

- Request URL Indexing: For new or updated content, use the URL Inspection tool in Google Search Console to request indexing. This can speed up crawling, although it does not guarantee that the page will be indexed.

- Monitor Crawling Issues: Regularly review the Crawl Stats and Page indexing (formerly Coverage) reports in Google Search Console to identify crawling or indexing issues. Address these issues promptly to maintain optimal visibility.
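Search Console also exposes URL inspection data through the URL Inspection API, which is useful for checking many pages at once. The sketch below uses the Python standard library to build the request and summarize the response. It assumes you already have an OAuth 2.0 access token for an account with access to the property; obtaining one is not shown. `siteUrl` must match a property you have verified, for example `https://example.com/` for a URL-prefix property or `sc-domain:example.com` for a Domain property. The helper names are hypothetical. The API reports index status only; requesting indexing is still done in the Search Console interface.

```python
import json
import urllib.request

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, site_url: str, access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) a URL Inspection API call."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def summarize(response: dict) -> str:
    """Pull the main index status fields out of an inspection response."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return (
        f"verdict={status.get('verdict', 'UNKNOWN')}, "
        f"coverage={status.get('coverageState', 'n/a')}, "
        f"robots.txt={status.get('robotsTxtState', 'n/a')}"
    )


# Usage, assuming `token` holds a valid access token:
# req = build_inspection_request("https://example.com/page", "https://example.com/", token)
# with urllib.request.urlopen(req) as resp:
#     print(summarize(json.load(resp)))
```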

Why is Google deindexing my pages?

Common Reasons for Deindexing

Pages can be deindexed from Google for several reasons, often related to violations of Google’s guidelines or issues that negatively impact the user experience.

- Low-Quality Content: If your pages have thin content, such as duplicate or automatically generated content that provides little value, Google might deindex them to maintain the quality of its search results. Ensure your content is unique and provides genuine value to users.

- Technical Issues: Problems like incorrect use of the robots.txt file or improper noindex meta tags can unintentionally block Google from indexing your pages. Review your site’s technical settings to ensure they are configured correctly for search engines to crawl and index your pages.

- Violations of Google’s Webmaster Guidelines (now called Google Search Essentials): Engaging in practices like cloaking, aggressive link schemes, or other black-hat SEO tactics can lead to a manual action that demotes your pages or removes them from Google’s index. Adhere to Google’s guidelines to avoid such penalties.

How to Diagnose Deindexing Problems

Diagnosing why Google may have deindexed your pages requires a methodical approach to identifying potential issues.

- Use Google Search Console: This tool is invaluable for diagnosing indexing issues. It can provide notifications about penalties or issues related to your site’s indexing status, such as crawl errors or manual actions.

- Check for URL Errors: Use Google Search Console or other webmaster tools to check whether crawl errors or URL-specific issues are preventing indexing.

- Review Technical SEO Settings: Conduct a thorough audit of your site’s SEO settings. Check for incorrect noindex tags, errors in robots.txt, and other technical settings that might affect indexing.
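Part of a technical audit is confirming the status code each important URL returns, because pages that respond with errors can be dropped from the index. The sketch below uses only Python’s `urllib`. The User-Agent string is a hypothetical placeholder, and the status interpretations are a simplified summary, not Google’s exact rules. Because `urlopen` follows redirects automatically, a redirect shows up as a final URL that differs from the one you requested, not as a 3xx code. Your server may also respond differently to this script than to Googlebot, so confirm anything surprising with the URL Inspection tool.

```python
import urllib.error
import urllib.request


def status_note(code: int) -> str:
    """Simplified reading of what an HTTP status code means for indexing."""
    if 200 <= code < 300:
        return "OK: indexable if nothing else blocks it"
    if 300 <= code < 400:
        # Rarely returned by check_url below, because urlopen follows redirects.
        return "redirect: Google generally indexes the target URL instead"
    if code in (404, 410):
        return "not found: the page drops out of the index over time"
    if 400 <= code < 500:
        return "client error: the page is unlikely to be indexed"
    if 500 <= code < 600:
        return "server error: persistent errors can get the page dropped"
    return "unexpected status"


def check_url(url: str) -> tuple[int, str, str]:
    """Fetch url; return (status code, final URL, X-Robots-Tag header or "")."""
    # Hypothetical User-Agent; real Googlebot requests may be answered differently.
    request = urllib.request.Request(url, headers={"User-Agent": "index-audit-sketch/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=10) as resp:
            return resp.status, resp.geturl(), resp.headers.get("X-Robots-Tag", "")
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses raise HTTPError but still carry a code and headers.
        return err.code, url, err.headers.get("X-Robots-Tag", "")


# Usage (makes a network request):
# code, final_url, robots_header = check_url("https://example.com/")
# print(code, final_url, status_note(code), robots_header)
```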

Solutions to Prevent Deindexing

Taking proactive measures can help ensure that your pages are properly indexed by Google and visible in search results.

- Improve Content Quality: Regularly update and enhance your content to ensure it remains valuable and relevant. Focus on providing unique insights, research, and detailed information.

- Regular Site Audits: Conduct frequent audits of your site to catch any technical SEO issues early. Tools like Screaming Frog or SEMrush can help automate these audits.

- Stay Compliant: Always stay updated with Google’s Webmaster Guidelines. Engage in ethical SEO practices and avoid manipulative tactics that could lead to penalties.

How to solve indexing issues?

Understanding Indexing Issues

Indexing issues refer to the problems encountered when a website or webpage is not properly indexed by search engines, keeping it from appearing in search results. This can be due to a variety of reasons, such as technical errors, content issues, or site structure problems. To solve indexing issues, it is crucial to first understand the root cause.

- Improve Website Structure: Having a well-organized site structure is key to ensuring search engines can crawl and index your pages effectively. Use a logical hierarchy, with a clear distinction between categories, subcategories, and individual pages.

- Fix Sitemap and Robots.txt Files: Ensure that your XML sitemap is up-to-date and correctly submitted to search engines. The robots.txt file should not block critical resources and should allow search engine bots to crawl your important pages.

- Resolve Duplicate Content: Duplicate content can confuse search engines and lead to indexing issues. Use canonical tags to indicate the preferred version of a page to search engines and remove or merge duplicate content wherever possible.
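One way to find near-duplicate pages on your own site is to compare their text with w-shingling and Jaccard similarity. This is a standard text-similarity technique, not how Google itself detects duplicates. The sketch assumes you have already extracted each page’s main text. The shingle size of 5 words and the 0.8 threshold are arbitrary starting points to tune, and the helper names are illustrative. Pairs it reports are candidates for a canonical tag, a redirect, or merging.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 5) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences ("shingles") of length `size`."""
    words = re.findall(r"\w+", text.lower())
    # Pages shorter than `size` words yield a single shorter shingle.
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return (url_a, url_b, score) for page pairs whose similarity reaches `threshold`."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for (u1, s1), (u2, s2) in combinations(sorted(sets.items()), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            pairs.append((u1, u2, round(score, 2)))
    return pairs
```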

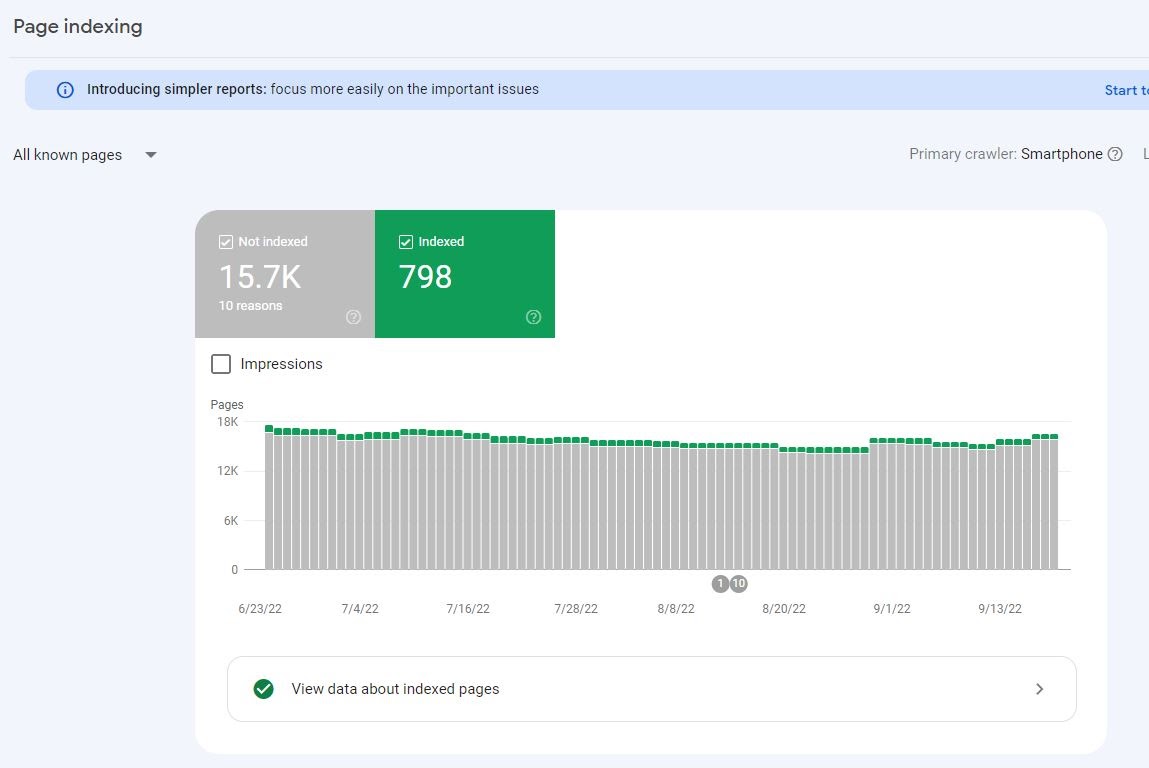

Using Google Search Console to Diagnose Problems

Google Search Console shows how Google crawls and indexes your site, which makes it a direct way to find and fix indexing issues. These features are the most useful for that:

- Page Indexing Report: The Page indexing report (formerly called Coverage) shows the indexing status of the pages Google knows about, including which pages are not indexed and why. Check it regularly to see which pages have problems and the reasons Google reports.

- URL Inspection Tool: This tool allows you to analyze specific URLs to see if they are indexed, identify any issues, and request re-indexing if needed. Use it to troubleshoot and ensure critical pages are appropriately indexed.

- Enhancements and Mobile Usability: Regularly monitor the Enhancements reports for detected issues that might affect how your pages appear in search. Mobile usability matters too: Google uses mobile-first indexing, meaning it primarily crawls and indexes the mobile version of your pages, so fixing mobile usability problems can improve accessibility and indexing chances.

Best Practices for Content and SEO

Content quality and SEO practices significantly influence indexing. By adhering to best practices, you can prevent and solve indexing problems more effectively.

- Ensure Quality Content: Content should be original, high-quality, and relevant to your audience. Poor-quality content is less likely to be indexed favorably by search engines.

- Optimize Meta Tags: Use clear, descriptive title tags and meta descriptions. These not only help in SEO but also assist search engines in understanding the content of each page.

- Utilize Internal Linking: Effective internal linking aids in improving site navigation for both users and search engines, helping search engines discover new content and establish the hierarchy of your site.
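Internal links are how crawlers reach most pages. It therefore helps to know how many clicks each page sits from the homepage, and which pages no internal link reaches at all (orphan pages). Assuming you already have the internal link graph, for example from a site crawler, a breadth-first search computes both. The graph format, sample paths, and helper names here are illustrative.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], known_pages: set[str], home: str = "/") -> set[str]:
    """Pages you know about (e.g. from your sitemap) that no internal link path reaches."""
    return known_pages - set(click_depths(links, home))
```

Pages with a large click depth or no inbound links at all are good candidates for new internal links from category pages or related articles.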

Frequently Asked Questions

What is the importance of having pages indexed by Google?

Having your pages indexed by Google is crucial for ensuring they are visible in search results. If your pages are not indexed, they will not appear when users search for related keywords, leading to a loss of potential traffic and opportunities for engagement and conversions. Indexing also plays a role in establishing the authority and relevance of your website, impacting your overall SEO strategy and performance.

What are common reasons pages may not be indexed?

Several factors can prevent pages from being indexed, including technical errors such as incorrect robots.txt settings or noindex tags. Other issues might involve duplicate content, poor quality or thin content, and insufficient backlinks to the specific pages. Additionally, crawling issues, where Google’s bots cannot access a page due to structural site errors or slow loading speeds, can also play a significant role.

How can you ensure your pages get indexed by Google?

To improve the indexing of your pages, ensure your website structure is clean and all important pages are linked from the homepage or major category pages. Use search engine-friendly URLs and avoid using duplicate content. Regularly submit an updated XML sitemap to Google Search Console, and check for any crawling errors through this platform. High-quality, unique content and acquiring quality backlinks will also enhance your page’s likelihood of being indexed.

What role does content play in page indexing?

Content quality is a significant factor in determining whether a page is indexed. Pages with high-quality, relevant, and informative content are more likely to be indexed as Google aims to promote pages that will fulfill user queries effectively. Thin or duplicate content tends to be neglected because it doesn’t provide significant value. Thus, it’s critical to focus on creating original, authoritative content that engages users and provides them with what they need, which in turn signals to Google that your page deserves to be indexed.