Getting your pages into Google’s index is a prerequisite for search visibility: a page that is not indexed cannot appear in search results or bring in search traffic. As Google continues to evolve its algorithms, website owners must stay informed and proactive in their approach to SEO. This article walks through the essential steps and best practices that give every page of your website the best chance of being indexed. By understanding the basics of SEO and using a few key tools, you can improve your site’s presence and performance in search results.

Effective Strategies to Ensure Every Page of Your Site is Indexed by Google

No single technique can guarantee that Google indexes every page of your website, but the strategies below give each page the best chance of being crawled and indexed. They improve the visibility of your content in search results and help bring organic traffic to your website.

Optimize Your XML Sitemap for Better Indexing

An XML sitemap is a file that lists the URLs you want search engines to find, giving crawlers a roadmap of your website’s structure. It makes it easier for search engines to discover and index your pages, especially ones that are new or poorly linked. Keep your XML sitemap up to date and make sure it includes all of your site’s important URLs. Submit the sitemap through Google Search Console to tell Google which pages you want crawled and indexed, and resubmit it when significant changes occur on your website.
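As a rough illustration of what such a file contains, here is a minimal Python sketch that builds a sitemap from a list of pages. The `build_sitemap` helper, the `example.com` URLs, and the dates are placeholders invented for this example; most sites would let a CMS plugin or generator tool produce the file instead.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML as a string for (url, lastmod) pairs.

    lastmod is a date string such as "2024-05-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = url
        if lastmod:
            ET.SubElement(node, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages on a placeholder domain.
pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/blog/indexing-guide", None),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Saving the output as `sitemap.xml` at the site root and submitting that URL in Search Console is the usual next step.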

Ensure Mobile-Friendliness of Your Website

Google uses mobile-first indexing, meaning it predominantly uses the mobile version of a page for indexing and ranking. To make sure your pages are indexed as intended, optimize them for mobile devices: use a responsive design, keep load times fast, and make sure the mobile version carries the same content and functionality as the desktop version. Google retired its standalone Mobile-Friendly Test tool in late 2023, so check mobile compatibility with a tool such as Lighthouse in Chrome DevTools and make any necessary adjustments.

Boost Page Load Speed for Efficient Crawling

The speed at which your pages load can significantly affect the frequency and efficiency of Googlebot’s crawling of your site. Faster-loading pages tend to be crawled more frequently. Utilize tools like Google PageSpeed Insights to analyze your site’s speed and get suggestions for improvement. Common recommendations include compressing images, reducing server response time, and leveraging browser caching to improve performance.

Use Internal Linking to Facilitate Indexing

Internal links are vital because they connect your site’s content, improve navigation, and distribute page authority and ranking power. These links allow Google to identify and access all the different parts of your website more efficiently. Ensure that every page has some links pointing to it, not only from the main navigation menu but also organically within the content. This linking strategy helps in ensuring that none of your pages are orphaned and go unnoticed by the crawlers.
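To make the idea of an orphaned page concrete, here is a small Python sketch that flags pages with no incoming internal links. It assumes you already have a list of your pages and a map of which page links to which (for example, exported from a crawler); the `find_orphans` function and the sample paths are hypothetical.

```python
def find_orphans(all_pages, links):
    """Return pages that no other page links to.

    all_pages: iterable of page paths you want indexed.
    links: dict mapping a source page to the pages it links to.
    """
    linked = set()
    for source, targets in links.items():
        for target in targets:
            if target != source:  # a self-link does not help discovery
                linked.add(target)
    return sorted(p for p in all_pages if p not in linked)


# Hypothetical site: the old promo page has no incoming links.
site_pages = ["/", "/blog", "/blog/post-1", "/about", "/landing/old-promo"]
internal_links = {
    "/": ["/blog", "/about"],
    "/blog": ["/", "/blog/post-1"],
    "/blog/post-1": ["/blog"],
}
orphans = find_orphans(site_pages, internal_links)
print(orphans)  # ['/landing/old-promo']
```

Any page this reports is a candidate for a contextual link from related content.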

Avoid Duplicate Content for Consistent Indexing

Duplicate content can confuse search engines: when several URLs carry the same content, Google typically picks one version to index and may leave the others out. Implement canonical tags to indicate the preferred version of a webpage to Google, which helps resolve duplication issues. Also, periodically audit your content with tools like Copyscape to ensure originality. If you have near-identical pages, use 301 redirects to point the less relevant ones at the main version so that links and ranking signals consolidate on a single URL.
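A canonical tag is a `<link rel="canonical" href="...">` element in the page’s `<head>`. The Python sketch below shows one way to check which canonical URL a page declares, using only the standard library. The `canonical_urls` helper and the sample HTML are made up for illustration; a real audit would fetch each page’s HTML first.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if "canonical" in rel and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_urls(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical page markup.
sample = """<html><head>
<title>Blue widgets</title>
<link rel="canonical" href="https://www.example.com/widgets/blue">
</head><body></body></html>"""
found = canonical_urls(sample)
print(found)  # ['https://www.example.com/widgets/blue']
```

An empty list means the page declares no canonical, and more than one entry usually signals a template problem worth fixing.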

| Strategy | Action Steps |
|---|---|
| XML Sitemap Optimization | Update and submit sitemap to Google Search Console regularly. |
| Ensure Mobile-Friendliness | Test pages on mobile devices (for example, with Lighthouse) and optimize accordingly. |
| Page Load Speed | Analyze with Google PageSpeed Insights and implement speed-enhancing suggestions. |
| Internal Linking | Integrate a robust internal linking strategy to connect all site pages. |
| Avoid Duplicate Content | Use canonical tags, audit with Copyscape, and implement 301 redirects as needed. |

How do I get Google to index all my pages?

To get Google to index all your pages, there are several key steps you can take to ensure your website is properly crawled and indexed by Google’s bots. Here’s a detailed guide with structured steps.

Submit a Sitemap to Google Search Console

Submitting a sitemap is a fundamental action to help Google discover your pages:

- Create an XML Sitemap: Use a plugin or online tool to generate a sitemap of your website, listing all relevant pages for indexing.

- Submit to Google Search Console: Navigate to the “Sitemaps” section in Google Search Console and submit your sitemap URL.

- Monitor for Errors: Regularly check the Search Console for any crawl errors or issues with the sitemap submission.
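Before submitting, it can help to confirm that the sitemap actually lists the pages you care about. The following Python sketch parses a sitemap and reports any expected URL that is missing. The function names, the sample sitemap, and the `example.com` URLs are assumptions made for this example.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the sitemaps.org schema.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs found in a sitemap document."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", NS)
        if loc.text
    }


def missing_from_sitemap(expected_urls, xml_text):
    """Return expected URLs that the sitemap does not list."""
    return sorted(set(expected_urls) - sitemap_urls(xml_text))


# Hypothetical sitemap content and page list.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog</loc></url>
</urlset>"""
expected = [
    "https://www.example.com/",
    "https://www.example.com/blog",
    "https://www.example.com/pricing",
]
missing = missing_from_sitemap(expected, sample_sitemap)
print(missing)  # ['https://www.example.com/pricing']
```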

Optimize Individual Pages for Crawling

Optimizing your pages ensures they’re easier for Google to read and index effectively:

- Use Meta Tags: Give each page a relevant title tag and meta description to provide concise information about the content.

- Improve Internal Linking: Make sure that each page is linked from other pages within your site to enhance discoverability.

- Ensure Mobile-Friendliness: Use responsive design to make your site more accessible to Google’s mobile-first indexing.

Use the URL Inspection Tool in Search Console

This tool, which replaced the older Fetch as Google feature, allows you to request that Google crawl specific pages:

- Access the Tool: Go to “URL Inspection” in Google Search Console and enter the URL you wish to have crawled and indexed.

- Request Indexing: Once the inspection of the URL finishes, choose the option to request indexing for that page.

- Watch Request Status: Monitor the status to ensure that the page has been successfully crawled and indexed.

Why doesn’t Google index all my pages?

Reasons Your Pages Might Not Be Indexed by Google

There are several reasons why Google might not index all of your webpages. Addressing these issues may improve your website’s indexing:

- Noindex Meta Tags: If your pages have noindex tags in their HTML, Google’s bots won’t index them. Check your site’s HTML or CMS settings to ensure that these tags are not being used unless intentionally.

- Poor Quality Content: Google values high-quality content. If pages have thin or duplicated content, they might not be indexed. Ensure your pages offer unique and valuable information to users.

- Technical Errors: Problems like server errors or incorrect DNS settings can prevent crawling and indexing. Regularly monitor your site health through tools like Google Search Console to identify such issues.
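The noindex check in particular is easy to automate. Here is a minimal Python sketch that scans a page’s HTML for a robots meta tag containing `noindex` (or `none`, which Google treats as equivalent to `noindex, nofollow`). The `has_noindex` helper and the sample markup are hypothetical, and the sketch does not cover the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def has_noindex(html):
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives or "none" in finder.directives


# Hypothetical page markup.
blocked_page = '<head><meta name="robots" content="noindex, follow"></head>'
open_page = '<head><meta name="robots" content="index, follow"></head>'
print(has_noindex(blocked_page))  # True
print(has_noindex(open_page))     # False
```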

Technical Inspections You Should Perform

Routine technical checks help you find and fix barriers to indexing:

- Check Robots.txt File: Ensure that the robots.txt file is not blocking access to the pages you want indexed. A disallow directive can prevent the Googlebot from accessing specific parts of your site.

- Inspect XML Sitemap: Submit an XML sitemap to Google Search Console and ensure it accurately lists all the important pages. A well-structured sitemap helps Google prioritize which URLs to index.

- Resolve Crawl Errors: Use Google Search Console to identify crawl errors. Fix issues like 404 errors or redirect errors to facilitate smooth indexing.
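The robots.txt check can be sketched with Python’s standard `urllib.robotparser` module, as shown below. The rules and URLs are invented for the example, and note one caveat: Python’s parser does simple prefix matching and may not reproduce every detail of how Googlebot interprets wildcards, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical robots.txt content.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /drafts/
"""
urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/indexing-guide",
    "https://www.example.com/drafts/new-post",
]
blocked = blocked_urls(robots_txt, urls)
print(blocked)  # ['https://www.example.com/drafts/new-post']
```

If a page you want indexed shows up in the output, relax or remove the matching `Disallow` rule.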

Content and Structure Optimization

Optimizing the content and structure of your site can enhance your chances of proper indexing:

- Enhance Internal Linking: An appropriate internal linking structure encourages Google to discover and index more pages by following the connections within your site.

- Improve Page Load Speed: Fast, reliable pages let Googlebot crawl more of your site in each visit, while slow pages may take longer to be crawled and indexed. Use tools like Google PageSpeed Insights to improve speed.

- Ensure Mobile-Friendliness: Make sure your site is mobile-friendly. As Google uses mobile-first indexing, pages that don’t render well on mobile devices might not be indexed.

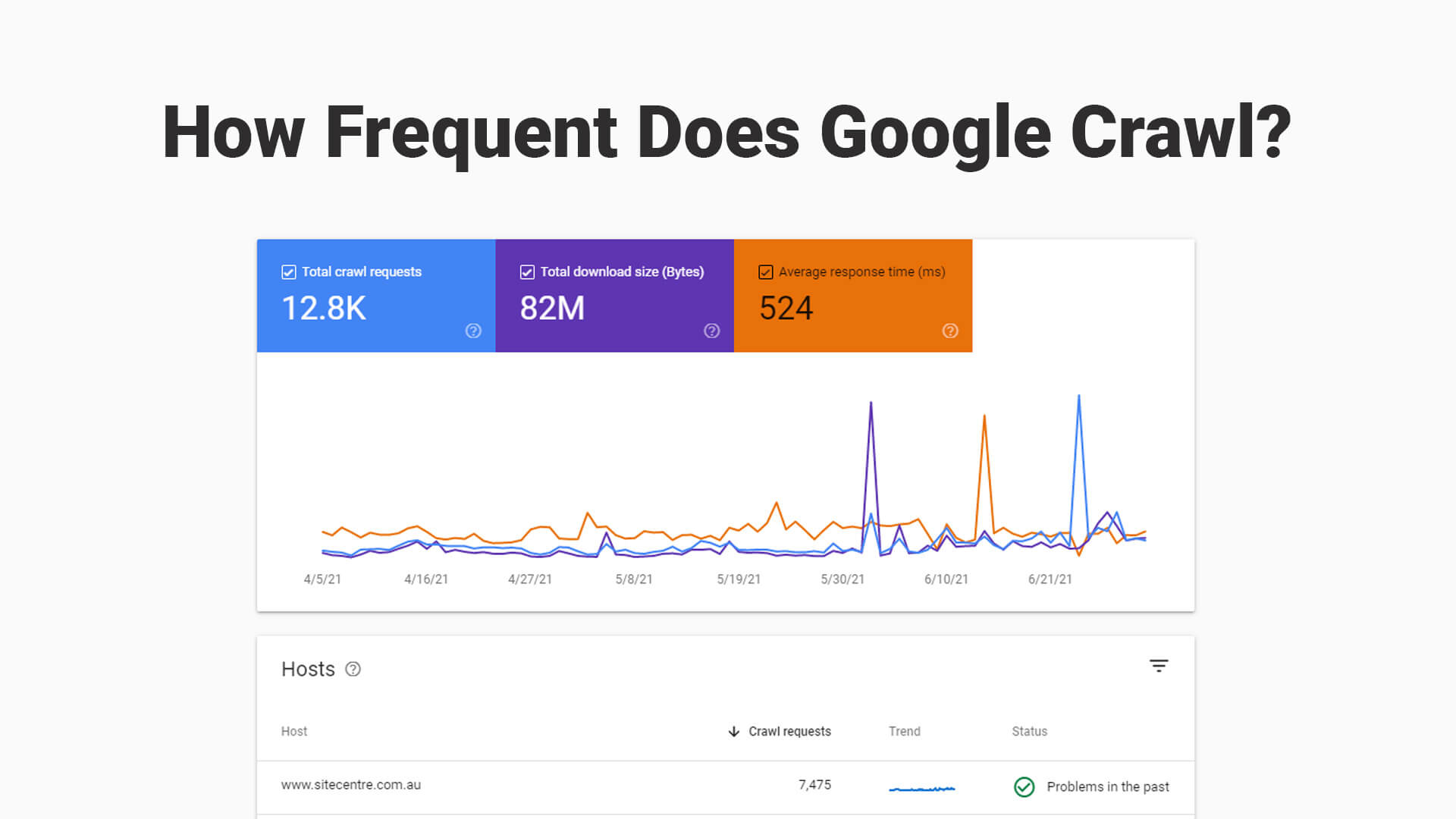

How often does Google index my site?

The frequency at which Google indexes a site varies widely. Googlebot, Google’s web crawler, decides how often to crawl each website based on factors such as how frequently the site is updated, its overall quality, and how much demand there is for its content. Websites that change often, like news sites, may be crawled more frequently than static sites. Here are some factors that contribute to how often Google might index your site:

Factors Influencing Google Indexing Frequency

1. Content Freshness: Websites that regularly update their content tend to be indexed more frequently as Google aims to index the latest information available.

2. PageRank and Authority: Pages with higher authority and more inbound links may also be indexed more often, as Google deems them as important pages.

3. Server Performance: Faster, more reliable servers can handle more frequent crawls from Google’s bots, affecting how often indexing happens.

How to Monitor and Improve Your Indexing Frequency

1. Use Google Search Console: This tool can show you how often Google is accessing your site and can even allow you to request indexing for specific URLs.

2. Submit a Sitemap: Providing a sitemap can help Google know which pages to prioritize and can assist in faster indexing of new or updated content.

3. Check Crawl Errors: Resolve any crawl errors identified by Google, as these could be preventing Google from accessing certain pages and affecting indexing frequency.

Common Myths About Google Indexing Frequency

1. Automatic Daily Indexing: Not all websites are indexed daily. Even active sites may only be fully recrawled every few days or weeks, depending on various factors.

2. All Pages Treated Equally: Not all pages on a website have the same crawl priority; important and frequently visited pages might be indexed more often than less important ones.

3. Paid Services for Faster Indexing: The claim that paying a third-party service gets your pages indexed faster is largely a myth. Google’s algorithms determine indexing frequency, and payment to third parties won’t directly affect this timing.

Frequently Asked Questions

What are the initial steps to ensure Google indexes every page of my site?

To give every page of your site the best chance of being indexed by Google, start by setting up a Google Search Console account. This tool helps you track your website’s performance and identify indexing issues. Next, submit a sitemap, which acts as a roadmap for Googlebot to understand the structure of your site, and update and resubmit it whenever new pages are added. Also check for and fix any crawl errors that appear, as these can keep Googlebot from accessing certain pages. An up-to-date sitemap helps Google navigate, index, and refresh your website’s pages efficiently.

How can I optimize my website’s structure for better indexing?

Optimizing your website’s structure is crucial for effective indexing by search engines. Start by ensuring your site has a clean and organized hierarchy, where important pages are not buried deep within subpages. Utilize internal linking efficiently to connect different parts of your website, which helps Google discover pages that might not receive external links. Another key factor is making sure URLs are descriptive yet concise, reflecting the content a page holds. Implementing a mobile-friendly design is also pivotal, given the shift to mobile-first indexing by Google. Last but not least, ensure that your site’s load speeds are optimized; slow load times can hinder Google’s crawling process.
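One way to spot pages that are buried too deep is to measure click depth, meaning the minimum number of clicks needed to reach a page from the homepage. The Python sketch below computes it with a breadth-first search over an internal link map. The link data and the cutoff of two clicks are assumptions chosen for the example, not a threshold Google publishes.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return the minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map: source page -> pages it links to.
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
    "/products": ["/products/widget"],
}
depths = click_depths(links)
deep_pages = sorted(p for p, d in depths.items() if d > 2)  # assumed cutoff
print(deep_pages)  # ['/blog/post-1/comments']
```

Pages missing from `depths` entirely are unreachable from the homepage through internal links.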

What role does content quality play in page indexing, and how can I enhance it?

Content quality directly impacts how Google indexes your pages. High-quality, original content makes it more likely that Google’s algorithms treat your pages as valuable and trustworthy. Avoid duplicate content, as it can lead to indexing issues such as the wrong version of a page being indexed or a page being left out altogether. To enhance content quality, focus on creating engaging, in-depth articles that answer what users are searching for. Incorporate keywords strategically across titles, headers, and body text, but avoid keyword stuffing, which can be detrimental. Regularly updating your pages with fresh, relevant content also signals to Google that the site is active and worth revisiting. Multimedia elements like images and videos can further enrich the user experience.

How does technical SEO influence the indexing of my site’s pages?

Technical SEO plays a fundamental role in ensuring efficient indexing of your site’s pages. Key technical aspects include optimizing your robots.txt file to guide search engine crawlers, allowing or disallowing access to certain parts of your site as needed. Implement canonical tags to prevent duplicate content issues by indicating the preferred version of a webpage. Make sure every page has an appropriate title tag and meta description, as these elements help search engines understand the content and purpose of each page. Serve your site over HTTPS as well, since Google treats it as a lightweight ranking signal. Finally, fix broken links and 404 errors to keep crawling and indexing running smoothly.