URL Inspection errors are a recurring challenge for web developers and SEO specialists who work to maintain a website’s performance and visibility. Knowing how to troubleshoot them is essential for making sure search engines like Google can crawl and index your site, which improves its discoverability. This article covers the common causes of URL Inspection errors and offers step-by-step guidance on diagnosing issues and applying fixes. Whether you’re dealing with indexing problems, crawl anomalies, or HTTPS errors, the goal is to give you the knowledge and tools you need to troubleshoot efficiently.

How to Troubleshoot URL Inspection Errors

Errors are common when working with Google’s URL Inspection tool, and knowing how to troubleshoot them can improve your website’s performance and search visibility. Below are the essential steps for addressing these issues.

Understanding URL Inspection Errors

URL Inspection errors can occur for many reasons, mostly related to indexing and crawlability. The tool reports problems such as pages that are not indexed, pages blocked by robots.txt, and pages that carry a noindex tag. Understanding the nature of each error is the first step in troubleshooting it.
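A noindex directive can come from two places that Google reads: a robots meta tag in the HTML and the `X-Robots-Tag` HTTP response header. The following is a minimal sketch using only Python’s standard library. It assumes you have already fetched the page’s HTML and response headers, and the function and class names are illustrative rather than part of any tool.

```python
from html.parser import HTMLParser

BLOCKING = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


def _directives(value):
    # "googlebot: noindex, nofollow" -> ["noindex", "nofollow"]; an optional user-agent prefix is dropped.
    return [part.split(":")[-1].strip().lower() for part in value.split(",")]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.extend(_directives(attrs.get("content") or ""))


def find_noindex(html, headers):
    """Return where a noindex directive was found: the meta robots tag, the X-Robots-Tag header, or both."""
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if BLOCKING & set(parser.directives):
        sources.append("meta robots tag")
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "x-robots-tag" and BLOCKING & set(_directives(value)):
            sources.append("X-Robots-Tag header")
    return sources


page = '<head><meta name="robots" content="noindex, follow"></head>'
print(find_noindex(page, {"Content-Type": "text/html"}))  # ['meta robots tag']
```

If the list is non-empty for a page you want indexed, remove the directive at its source. A header is usually set in the web server or CDN configuration, and a meta tag in the page template.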

Analyzing the Index Coverage Report

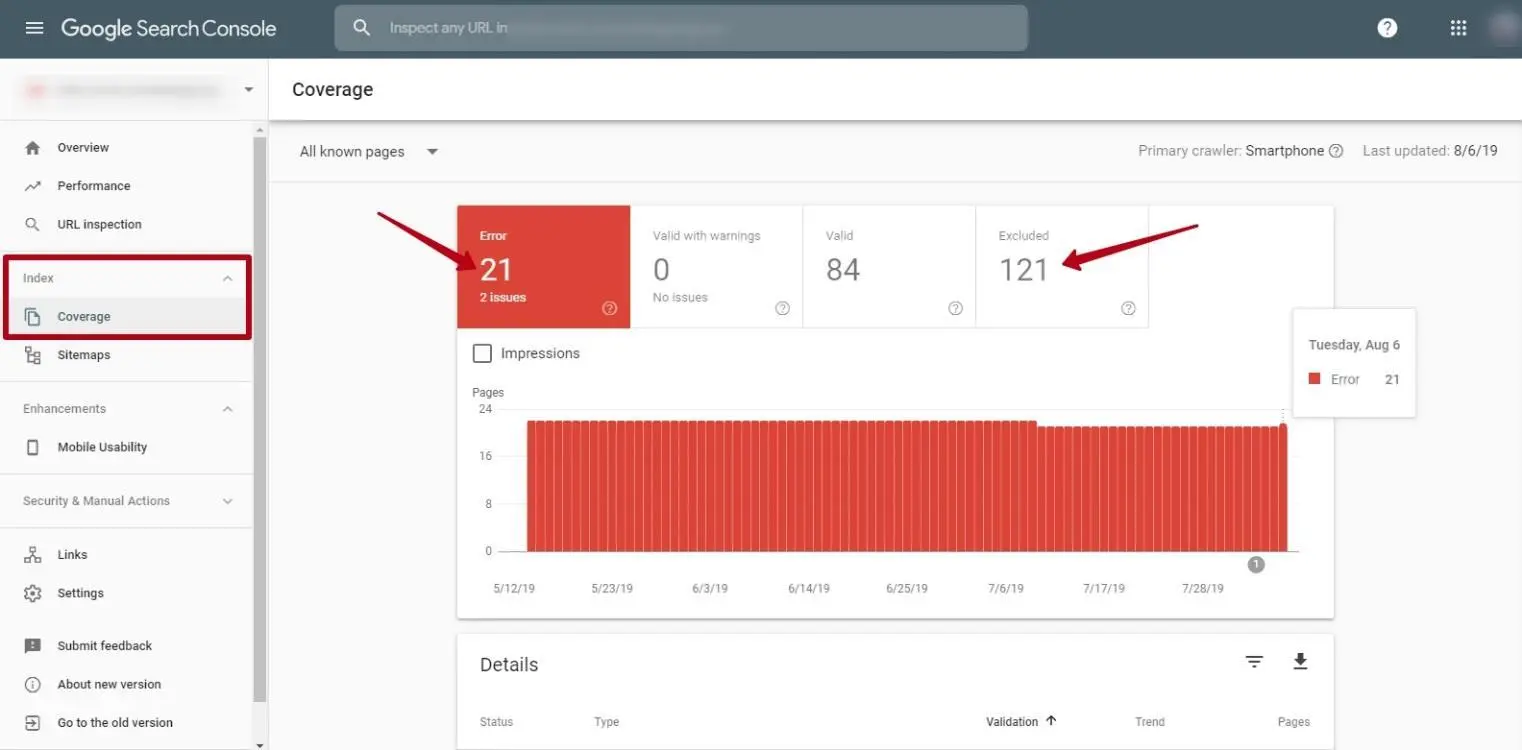

The Index Coverage report in Google Search Console (now called the Page indexing report) shows the indexing status of the URLs Google knows about on your site. The original report classified URLs as Error, Valid with warnings, Valid, or Excluded. The current version groups them as Indexed or Not indexed and gives a reason for each. For troubleshooting:

1. Navigate to the Index Coverage (Page indexing) report.

2. Identify URLs with the Error status, or Not indexed reasons you did not intend.

3. Inspect the specific reason for each error, such as a server error (5xx) or a redirect error.

Identifying and Resolving Crawl Errors

Crawl errors are often caused by 404 Not Found responses or DNS problems:

- For 404 Not Found, check for broken links and either update them or set up 301 redirects to a valid URL.

- For DNS issues, ensure the domain’s DNS records are correctly configured and that the domain resolves and is accessible.
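A quick first test for a DNS problem is to check whether the hostname resolves at all. This sketch uses `socket.getaddrinfo`, which queries the system resolver (configured DNS servers plus the local hosts file). The helper names are hypothetical.

```python
import socket
from urllib.parse import urlsplit


def resolve_host(url):
    """Return the sorted IP addresses that the URL's hostname resolves to.

    Raises socket.gaierror if the lookup fails, or ValueError if the URL has no hostname.
    """
    host = urlsplit(url).hostname
    if not host:
        raise ValueError(f"no hostname in {url!r}")
    # getaddrinfo uses the system resolver (DNS servers plus the local hosts file).
    infos = socket.getaddrinfo(host, None)
    return sorted({info[4][0] for info in infos})


def check_dns(url):
    """Return a one-line DNS diagnosis for url."""
    try:
        return f"{url} resolves to {', '.join(resolve_host(url))}"
    except socket.gaierror as exc:
        return f"DNS lookup failed for {url}: {exc}"
```

Running `check_dns("https://www.example.com/")` from your own machine only shows what your resolver sees. Googlebot may get a different answer because of caching or split-horizon DNS, so a successful lookup here is necessary but not sufficient.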

Understanding Blocked Resources

Blocked resources can prevent Google from rendering your pages properly. In the URL Inspection tool, check for any resources that might be blocked by robots.txt:

- Open your robots.txt file and verify the rules.

- Unblock essential resources such as CSS and JS files that help with rendering.
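You can test robots.txt rules against your CSS and JavaScript URLs before deploying a change. This minimal sketch uses Python’s `urllib.robotparser`. The robots.txt content and URLs are made-up examples. Python’s parser applies rules in file order (first match wins) and does not understand `*` or `$` wildcards in paths, whereas Google uses the most specific matching rule. The sample therefore places the `Allow` line first so that both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: stylesheets are unblocked, everything else under /assets/ is not.
ROBOTS_TXT = """\
User-agent: *
Allow: /assets/css/
Disallow: /assets/
"""


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


resources = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/index.html",
]
print(blocked_resources(ROBOTS_TXT, resources))
# ['https://www.example.com/assets/js/app.js']
```

Here the JavaScript bundle is still blocked, so Google could fail to render the page correctly. Adding an `Allow: /assets/js/` line above the `Disallow` rule would fix it.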

Using the URL Inspection Tool Effectively

To use the URL Inspection tool effectively:

- Enter the URL you want to inspect and review its current status.

- Look for details under Coverage and Enhancements to identify issues.

- Use the Test Live URL feature to check whether the issue persists after your fixes.

- After making corrections, use the Request Indexing option to prompt Google to recrawl the URL.
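If you need to inspect many URLs, Search Console also exposes URL Inspection through the Search Console API (`urlInspection.index.inspect`). The sketch below builds a request and condenses the `indexStatusResult` part of a response. It assumes you already have an OAuth 2.0 access token for a verified property, and token handling is not shown. The field and enum names follow the API reference at the time of writing, so check them against the current documentation before relying on them. The helper names are hypothetical.

```python
import json
import urllib.request

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"

# indexingState values that mean indexing is blocked (taken from the API reference; verify).
BLOCKED_INDEXING = {"BLOCKED_BY_META_TAG", "BLOCKED_BY_HTTP_HEADER", "BLOCKED_BY_ROBOTS_TXT"}
FETCH_OK = {"SUCCESSFUL", "PAGE_FETCH_STATE_UNSPECIFIED"}


def build_inspect_request(page_url, site_url, access_token):
    """Build (but do not send) a URL Inspection API request for page_url in property site_url."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
        method="POST",
    )


def summarize_inspection(response):
    """Reduce a parsed inspection response to its verdict, coverage state, and obvious problems."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    problems = []
    if status.get("robotsTxtState") == "DISALLOWED":
        problems.append("blocked by robots.txt")
    if status.get("indexingState") in BLOCKED_INDEXING:
        problems.append(f"indexing blocked: {status['indexingState']}")
    fetch_state = status.get("pageFetchState")
    if fetch_state and fetch_state not in FETCH_OK:
        problems.append(f"page fetch failed: {fetch_state}")
    return {
        "verdict": status.get("verdict"),
        "coverage": status.get("coverageState"),
        "problems": problems,
    }
```

To send the request, pass it to `urllib.request.urlopen` and decode the body with `json.load`, then call `summarize_inspection` on the result. Set `site_url` to the property exactly as it is registered in Search Console. For a Domain property, that takes the form `sc-domain:example.com`. The API cannot replace the Request Indexing button. That step still happens in the Search Console interface.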

| Error Type | Description | Solution |
|---|---|---|
| 404 Not Found | The page is missing or the link is broken. | Set up a 301 redirect or update the link. |
| Server Errors (5xx) | The server is overloaded, down, or misconfigured. | Check server logs and resource limits. |
| Blocked by robots.txt | The URL is disallowed for crawling. | Update the robots.txt rules and retest the URL. |
| Redirect Errors | A redirect loop, an overly long redirect chain, or a redirect to an invalid URL. | Redirect directly to the final URL, using 301 redirects for permanent moves. |
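To check the redirect row of the table, you can follow a chain one hop at a time so that every intermediate status is visible. This sketch uses `http.client` and does not follow redirects automatically. It flags temporary redirects, loops, chains that end in an error, and overly long chains. The `fetch` function is injectable so the logic can be tested without network access. The 10-hop limit is an assumption chosen to roughly match the redirect limit Google documents for Googlebot.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}
PERMANENT = {301, 308}


def fetch_head(url, timeout=10):
    """Send a single HEAD request without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    conn_class = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_class(parts.netloc, timeout=timeout)
    try:
        target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url, fetch=fetch_head, max_hops=10):
    """Follow a redirect chain one hop at a time; return the chain and any problems found."""
    chain, issues = [], []
    while True:
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_CODES:
            if status >= 400:
                issues.append(f"chain ends in an error: {status} at {url}")
            break
        if not location:
            issues.append(f"{status} at {url} has no Location header")
            break
        if status not in PERMANENT:
            issues.append(f"temporary {status} redirect at {url}")
        next_url = urljoin(url, location)  # Location may be relative
        if any(next_url == seen for seen, _ in chain):
            issues.append(f"redirect loop back to {next_url}")
            break
        if len(chain) >= max_hops:
            issues.append(f"gave up after {max_hops} redirects")
            break
        url = next_url
    return chain, issues
```

A clean result is a short chain of 301 (or 308) hops ending in a 200. Ideally there is a single hop, with internal links updated to point straight at the final URL.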

How to troubleshoot a URL issue?

Identify the Issue with the URL

First, determine exactly what is wrong with the URL. The following checks help you identify the problem:

- Check Syntax: Ensure the URL is correctly formatted with the appropriate scheme (http:// or https://), hostname, and path.

- Verify Domain: Confirm that the domain name is spelled correctly and the top-level domain (.com, .net, etc.) is appropriate.

- Examine Parameters: If there are query parameters present, check for any syntax issues or missing/extra characters like ‘&’ or ‘=’.
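Python’s `urllib.parse` can partly automate the three checks above. This sketch flags a non-HTTP scheme, a missing hostname, and a malformed query string. It also flags a hostname without a dot, which is a rough heuristic for a missing top-level domain and will also flag names like `localhost`. It cannot tell whether a well-formed domain is the *right* one, so that still needs a human check. The function name is hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit


def check_url_syntax(url):
    """Return a list of syntax problems found in url (an empty list means none were detected)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append(f"unexpected scheme {parts.scheme!r}")
    host = parts.hostname or ""
    if not host:
        problems.append("missing hostname")
    elif "." not in host:
        problems.append(f"hostname {host!r} has no top-level domain")
    if parts.query:
        try:
            # strict_parsing rejects empty fields ("&&") and fields without "=".
            parse_qsl(parts.query, keep_blank_values=True, strict_parsing=True)
        except ValueError as exc:
            problems.append(f"malformed query string: {exc}")
    return problems


print(check_url_syntax("htps://www.example.com/shop?item=42&&color=blue"))
```

The example URL has a misspelled scheme and a doubled `&`, so both problems are reported.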

Analyze Server Response Codes

Understanding the server response codes can help determine the root cause of a URL issue, as they provide clear indications of what went wrong.

- 200 – OK: The URL is working fine and you should see the content it leads to.

- 404 – Not Found: This signifies that the server can’t find the requested resource; check for errors in the URL path or file name.

- 500 – Internal Server Error: This indicates a problem with the server, not the URL itself; check the server logs or contact the server administrator for more details.
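To see which response code a URL returns, you can request it yourself. This sketch uses `urllib.request`, which follows redirects automatically, so it reports the status of the final URL. `describe_status` is a hypothetical helper that maps codes to the hints above.

```python
import urllib.error
import urllib.request


def get_status(url, timeout=10):
    """Request url with HEAD and return the final HTTP status code (redirects are followed)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses are raised as HTTPError, but the code is still what we want.
        return err.code


def describe_status(code):
    """Map an HTTP status code to a short troubleshooting hint."""
    if 200 <= code < 300:
        return "OK: the server returned the content"
    if 300 <= code < 400:
        return "Redirect: check where the Location header points"
    if code == 404:
        return "Not Found: check the URL path or file name"
    if 400 <= code < 500:
        return "Client error: check the URL, permissions, or firewall rules"
    if 500 <= code < 600:
        return "Server error: check the server logs or contact the administrator"
    return "Unexpected status code"
```

For example, `describe_status(get_status("https://www.example.com/missing-page"))` would print the Not Found hint if the server answers with a 404. Some servers reject HEAD requests with a 405. In that case, drop `method="HEAD"` to send a GET instead.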

Test the URL in Different Environments

Testing the URL across various environments can help pinpoint whether the problem is local or widespread.

- Different Browsers: Test the URL in multiple web browsers to see if the issue is browser-specific.

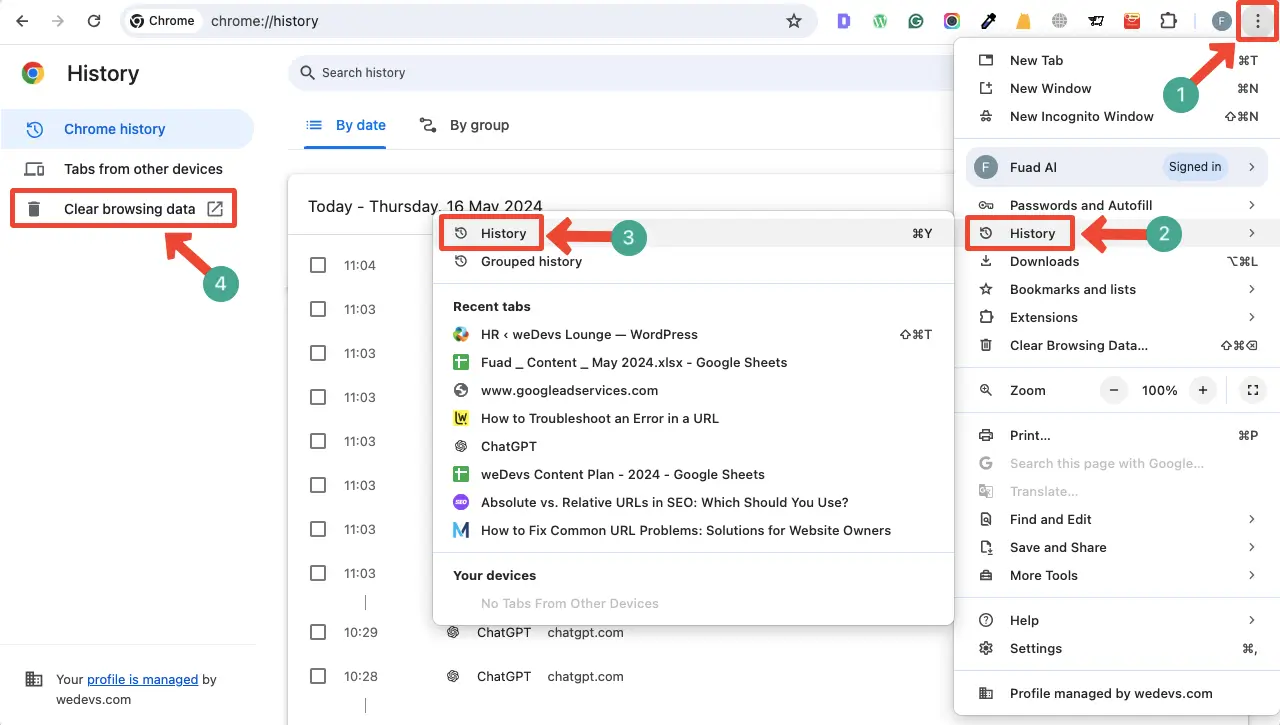

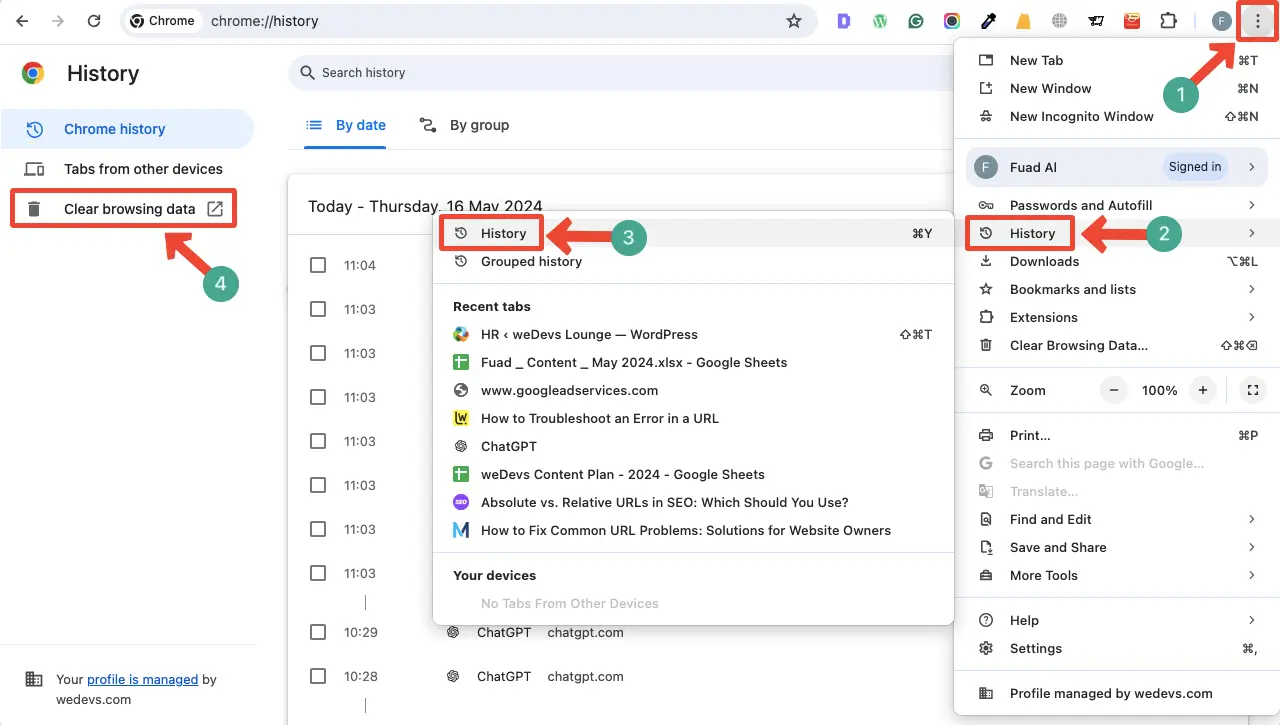

- Clear Cache: Clear the browser cache and cookies, as cached information might cause issues with loading the URL correctly.

- Network Connections: Try accessing the URL from different networks (Wi-Fi, mobile data) to rule out network-related problems.

How to check URL for errors?

Understanding Common URL Errors

To effectively check a URL for errors, understanding the most common issues is the first step. Here’s a detailed look at frequent URL problems:

- Typographical Errors: Typos in a URL, such as missing characters or incorrect punctuation, can lead to broken links.

- Invalid Characters: URLs may only contain a limited set of characters; spaces and certain other symbols must be percent-encoded (for example, a space becomes %20).

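- Case Sensitivity: The path and query of a URL are case-sensitive, and servers on UNIX-like systems typically treat /Page and /page as different resources, so mismatched case can cause errors. The scheme and domain name are not case-sensitive.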
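The invalid-character check can be scripted against the character set that RFC 3986 allows to appear unencoded. This is a minimal sketch. It treats `%` as allowed so that existing escapes are left alone, which means it does not verify that each `%` actually starts a valid escape. The function names are hypothetical.

```python
from urllib.parse import quote

# Characters RFC 3986 allows to appear literally: unreserved, reserved (gen-delims
# and sub-delims), plus "%" so that existing percent-escapes are left alone.
RESERVED = ":/?#[]@!$&'()*+,;="
ALLOWED = set(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-._~" + RESERVED + "%"
)


def invalid_characters(url):
    """Return the distinct characters in url that must be percent-encoded."""
    return sorted({ch for ch in url if ch not in ALLOWED})


def encode_url(url):
    """Percent-encode everything outside ALLOWED (UTF-8 for non-ASCII), keeping reserved characters."""
    return quote(url, safe=RESERVED + "%")


print(invalid_characters("https://example.com/my page"))  # [' ']
print(encode_url("https://example.com/my page"))          # https://example.com/my%20page
```

Case problems cannot be fixed mechanically like this, because `/Page` and `/page` may legitimately be different resources. Compare the URL against the actual path on the server instead.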

Utilizing Online URL Validators

Online tools can effectively identify errors in URLs. These platforms often provide comprehensive diagnostics:

- Accessibility Analysis: They check whether the URL is accessible and returns a valid HTTP status code like 200 OK.

- SSL Verification: The tool verifies if the URL is using a valid SSL certificate, essential for secure connections.

- Redirects Check: It identifies if the URL improperly redirects or encounters loops that prevent access.
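You can also run the SSL check yourself with Python’s `ssl` module. `ssl.create_default_context()` verifies the certificate chain and the hostname, so an invalid certificate raises `ssl.SSLCertVerificationError` during the handshake. For a valid certificate, `days_left` converts the `notAfter` field into the number of days remaining. Both function names are hypothetical, and no network connection is made until you call `cert_expiry`.

```python
import socket
import ssl
import time


def cert_expiry(host, port=443, timeout=10):
    """Connect with certificate verification enabled; return the certificate's notAfter as epoch seconds."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return ssl.cert_time_to_seconds(cert["notAfter"])


def days_left(not_after_seconds, now=None):
    """Whole days until expiry; a negative value means the certificate has already expired."""
    now = time.time() if now is None else now
    return int((not_after_seconds - now) // 86400)
```

For example, `days_left(cert_expiry("www.example.com"))` returns the days remaining for that site’s certificate. A small or negative number means it is time to renew.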

Manual URL Inspection Techniques

Conducting a manual inspection of URLs can reveal details that automated tools might overlook:

- Path Integrity: Ensure the URL path is correct, matching the directory and file structure precisely.

- Parameter Encoding: Confirm that any query parameters are correctly encoded, avoiding accidental data misinterpretation.

- Domain Verification: Verify that the domain is accurate and that the hostname resolves correctly, particularly on networks that use their own DNS servers or hosts-file entries.
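The parameter-encoding problem is easy to reproduce. In this example, a value that contains `&` and spaces is pasted into a query string without encoding, and part of it is silently lost. Encoding the parameters with `urlencode` first preserves the value exactly. The query strings are made-up examples.

```python
from urllib.parse import parse_qs, urlencode

# A value containing "&" and spaces, pasted into the query string without encoding.
raw_query = "q=shoes & socks&page=2"
print(parse_qs(raw_query))
# {'q': ['shoes '], 'page': ['2']}  (" socks" became a separate, empty field and was dropped)

# Encoding the parameters first preserves the value exactly.
encoded_query = urlencode({"q": "shoes & socks", "page": 2})
print(encoded_query)            # q=shoes+%26+socks&page=2
print(parse_qs(encoded_query))  # {'q': ['shoes & socks'], 'page': ['2']}
```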

How to fix crawl errors in Google Search Console?

Understanding Crawl Errors in Google Search Console

Before fixing crawl errors, it’s important to understand what they mean. Crawl errors occur when Googlebot tries to access a page on your website but fails. These errors can prevent your site from being properly indexed by search engines.

- DNS Errors: These occur when Googlebot cannot resolve your domain name, for example because your DNS server does not respond or the DNS records are missing or wrong. Ensure that your DNS settings are correctly configured.

- Server Errors: These indicate that Googlebot received a server error (5xx) or timed out when attempting to access your site. Check your server’s availability and performance.

- 404 Not Found Errors: These occur when a page is not found on your site. Ensure all internal links point to the correct URLs and create a custom 404 page to guide visitors.
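A custom 404 page should still be served with a 404 status code. If it returns 200, Search Console may report the URL as a soft 404. This minimal sketch is a WSGI app with hypothetical pages that shows the pattern. Your CMS or web server will have its own setting for the error page.

```python
# Hypothetical site content; a real site would route to templates instead.
KNOWN_PAGES = {"/": b"<h1>Home</h1>", "/about": b"<h1>About us</h1>"}

NOT_FOUND_PAGE = b"""<h1>Page not found</h1>
<p>Try the <a href="/">home page</a> or use the site search.</p>"""


def app(environ, start_response):
    """Minimal WSGI app: serves known pages, otherwise a custom 404 page with a real 404 status."""
    body = KNOWN_PAGES.get(environ.get("PATH_INFO", "/"))
    if body is None:
        # The friendly page is still sent with status 404; sending 200 here
        # is what leads to soft 404 reports.
        start_response("404 Not Found", [("Content-Type", "text/html; charset=utf-8")])
        return [NOT_FOUND_PAGE]
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [body]
```

To try it locally, serve it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and request a missing path. The browser shows the friendly page, and the response status is 404.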

How to Address Crawl Errors

Once you understand the types of crawl errors, follow these steps to address and resolve them effectively:

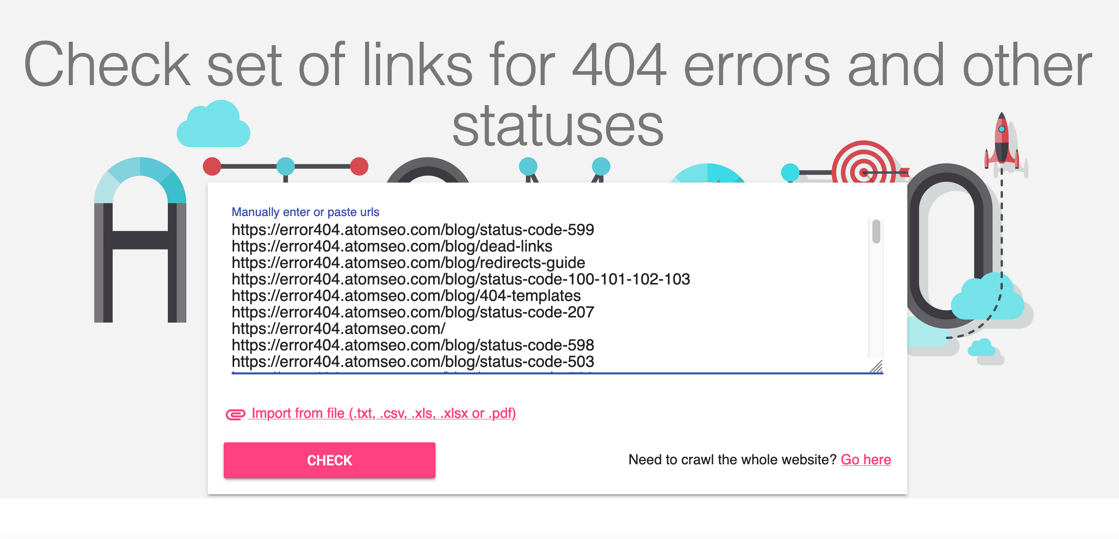

- Fix Broken Links: Use a tool to identify broken links on your site and update or remove them to ensure all links direct to existing pages.

- Submit a Sitemap: Submit an updated sitemap to Google Search Console to help Google discover the pages you want indexed. A sitemap does not guarantee indexing, but it makes new and changed URLs easier to find.

- Monitor Regularly: Regularly check your Google Search Console for new or recurring crawl errors to keep track of your site’s health.
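As a starting point for the broken-link step, this sketch collects the `<a href>` links from a page’s HTML, resolves them against the page URL, and reports links that fail or answer with a 4xx or 5xx status. The `check` function is injectable so you can test it offline. The default `link_status` sends HEAD requests, which a few servers reject. All names are hypothetical.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

SKIP_PREFIXES = ("#", "mailto:", "tel:", "javascript:")


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href and not href.startswith(SKIP_PREFIXES):
            self.links.append(urljoin(self.base_url, href))


def link_status(url, timeout=10):
    """Return the final HTTP status for url, or None if it cannot be reached at all."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code
    except OSError:
        return None  # DNS failure, refused connection, timeout, TLS error, ...


def find_broken_links(html, page_url, check=link_status):
    """Return (url, status) pairs for links that fail or answer with 4xx/5xx."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    broken = []
    for url in dict.fromkeys(collector.links):  # de-duplicate while keeping order
        status = check(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Run it over your key pages and fix each reported link, either by updating it or by redirecting the old URL. Space out requests on large sites so that the check itself does not overload the server.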

Preventing Future Crawl Errors

Preventing crawl errors is more effective than fixing them after they occur. Here are strategies to prevent them:

- Use 301 Redirects: When you move or delete a page, set up 301 redirects from the old URL to the new one to maintain link equity and guide users correctly.

- Optimize Server Performance: Ensure your server can handle the traffic load and is properly configured to prevent server errors during Googlebot crawls.

- Regularly Update Content: Remove outdated pages and regularly update content to reduce the risk of 404 errors and improve site navigation.
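Redirects are usually configured in the web server (for example, `return 301` in nginx or `Redirect 301` in Apache). To show the same idea in application code, here is a small WSGI middleware sketch. The old and new paths are hypothetical.

```python
# Hypothetical moved pages: old path -> new path.
REDIRECTS = {
    "/old-pricing": "/pricing",
    "/blog/2019/launch": "/blog/launch",
}


def redirect_middleware(app, redirects=REDIRECTS):
    """Wrap a WSGI app so that moved paths answer with a permanent 301 redirect."""

    def wrapped(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is not None:
            # 301 tells browsers and Googlebot that the move is permanent.
            start_response("301 Moved Permanently", [("Location", target)])
            return [b""]
        return app(environ, start_response)

    return wrapped
```

Point each old path directly at its final destination. Chaining one redirect into another adds hops and can eventually surface as a redirect error.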

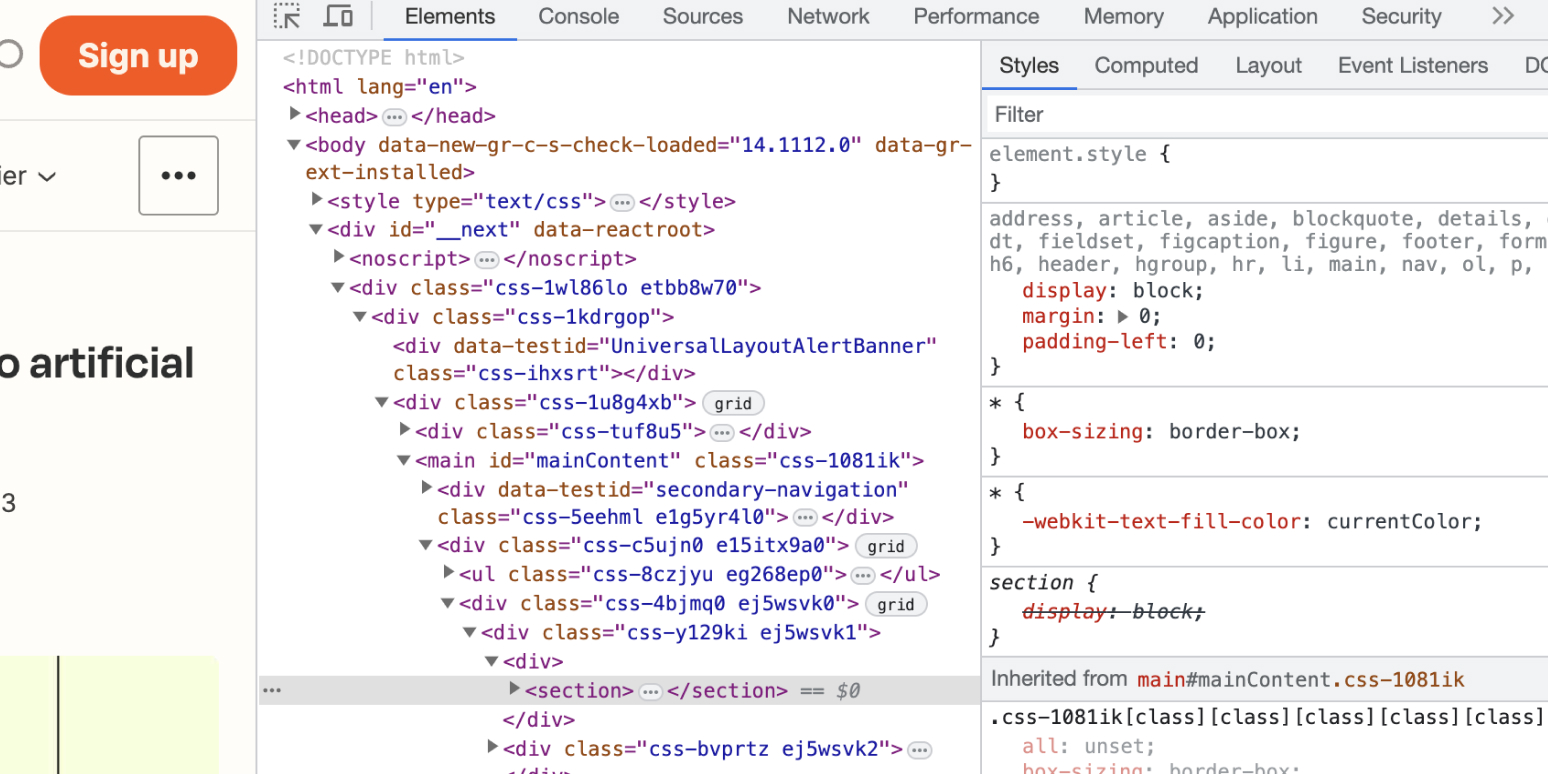

How to inspect element URL?

To inspect an element’s URL, you can use web development tools baked into most modern browsers. These tools allow you to see the HTML structure and the URLs linked within a page. Here’s how you can do it and understand the underlying concepts.

Steps to Inspect an Element URL Using Developer Tools

To successfully inspect an element’s URL, follow these steps using browser developer tools:

- Open Developer Tools: Right-click on a webpage and select Inspect (or Inspect Element depending on the browser) to open Developer Tools.

- Element Selection: Use the inspection tool to hover or click on the desired element. Look for an element like an anchor (`<a>`) tag if you’re trying to find a hyperlink URL.

- View the URL: In the HTML structure, identify the highlighted `<a>` tag. The URL of the anchor will be found in the `href` attribute, revealing the link destination.

Understanding HTML Structure for URLs

Comprehending how URLs are embedded in HTML is essential to navigating and tracing their paths:

- Anchor Tags: Commonly used to embed URLs in HTML, represented by `<a href="...">`. Here, the `href` attribute holds the actual URL.

- Image Sources: For image URLs, look for the `src` attribute within `<img>` tags, which specifies the image file location.

- Scripts and Styles: External scripts and styles have their URLs in the `src` attribute of `<script>` tags or the `href` attribute of `<link>` tags used for stylesheets.
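The tag-to-attribute mapping above can be applied to a saved copy of a page’s HTML. This sketch records the URL for each anchor, image, script, and stylesheet link. It parses the HTML as served, so URLs that JavaScript adds later will only appear in the live DOM shown by Developer Tools. The class name is hypothetical.

```python
from html.parser import HTMLParser

# Which attribute carries the URL for each tag discussed above.
URL_ATTRIBUTES = {"a": "href", "link": "href", "img": "src", "script": "src"}


class UrlExtractor(HTMLParser):
    """Records (tag, url) pairs for anchors, images, scripts, and stylesheet links."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        attr = URL_ATTRIBUTES.get(tag)
        value = dict(attrs).get(attr) if attr else None
        if value:
            self.found.append((tag, value))


html = """
<link rel="stylesheet" href="/css/site.css">
<script src="/js/app.js"></script>
<a href="https://www.example.com/contact">Contact</a>
<img src="/images/logo.png" alt="Logo">
"""
extractor = UrlExtractor()
extractor.feed(html)
print(extractor.found)
# [('link', '/css/site.css'), ('script', '/js/app.js'),
#  ('a', 'https://www.example.com/contact'), ('img', '/images/logo.png')]
```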

Tips for URL Inspection and Troubleshooting

Once you can locate URLs in the page’s HTML, these additional tips help with troubleshooting:

- Check Permissions: Ensure you have the necessary permissions to view linked URLs, particularly if content is restricted or secured.

- Look for JavaScript Impact: Dynamic JavaScript can modify URLs after initial page load; inspect the Console for script actions.

- Mobile Emulation: Switch to mobile device view in Developer Tools if inspecting URLs that behave differently on mobile interfaces.

Frequently Asked Questions

What are common errors encountered during URL inspection?

During a URL inspection, common errors include crawlability problems, indexability problems, and server errors. Crawlability problems mean that search engine bots cannot access the page, for example because of a disallow directive in the robots.txt file or a poor server response. Indexability problems occur when a noindex meta tag blocks a page from being indexed, or when canonical issues lead search engines to prefer a different URL. Server errors such as 500 Internal Server Error or 503 Service Unavailable can also prevent a successful URL inspection because they indicate problems with the server hosting the website, so address these server-side problems promptly.
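One canonical issue is easy to check offline: whether a page’s declared canonical (`<link rel="canonical">`) points somewhere other than the page itself. In URL Inspection, this declared value appears as the user-declared canonical. This sketch resolves a relative canonical against the page URL before comparing. A mismatch is often intentional, for example on URLs with sorting parameters, so treat the result as something to review rather than an error. The names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Finds the href of the first <link rel="canonical"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rels = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attrs.get("href")


def canonical_mismatch(html, page_url):
    """Return the declared canonical URL if it points somewhere other than page_url, else None."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonical:
        return None
    canonical = urljoin(page_url, finder.canonical)
    return canonical if canonical != page_url else None
```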

How can you resolve crawlability issues?

To troubleshoot crawlability issues, first review the robots.txt file to ensure no critical areas of your site are mistakenly blocked. Use the URL Inspection Tool to check for crawl errors, then monitor server logs for instances of blocked requests. Ensuring that your server is configured to return 200 status codes for desired pages and that there are no misconfigured firewall rules hindering the bot’s access is essential. Additionally, regularly check your website for broken internal links and fix them to maintain seamless navigation for both users and bots.
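To check server logs for crawl problems, you can count Googlebot requests by status code and path. This sketch assumes the common Apache/Nginx "combined" log format, so adjust the regular expression if your format differs. User-agent strings can be spoofed. Google recommends confirming real Googlebot traffic with a reverse DNS lookup, which should resolve to googlebot.com or google.com, followed by a forward lookup.

```python
import re
from collections import Counter

# "combined" format: IP, identity, user, [time], "request", status, size, "referer", "user agent".
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Count (status, path) pairs for requests whose user agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match["agent"]:
            counts[(int(match["status"]), match["path"])] += 1
    return counts
```

For example, `googlebot_status_counts(open("access.log")).most_common(10)` would list the most frequent status and path combinations (the file name is a placeholder). Look for 403 responses, which may point to firewall rules, and for 5xx responses, which point to server errors.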

What strategies can help improve indexability?

Improving indexability begins with ensuring there is no unintentional use of the noindex directive on important pages. It’s also crucial to maintain a clean URL structure and to submit sitemaps to search engines like Google to guide crawlers efficiently. Regularly performing a site audit helps identify potential indexation issues such as duplicate content or incorrect canonical tags that might steer bots away from your preferred URLs. Updating robots.txt to allow crawling of essential pages supports indexability, and structured data can improve how your content appears in search results.
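If your platform does not generate a sitemap, you can build one that follows the sitemaps.org protocol with the standard library. This sketch writes `<loc>` and optional `<lastmod>` entries. The URLs and dates are placeholders. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites need several files and a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize as the default xmlns instead of "ns0:"


def build_sitemap(entries):
    """Return sitemap XML as UTF-8 bytes for (url, lastmod) pairs; lastmod (YYYY-MM-DD) may be None."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        if lastmod:
            ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


xml_bytes = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/about", None),
])
```

Save the bytes as `sitemap.xml` at the site root. Then submit its URL in the Sitemaps report in Search Console, or reference it from robots.txt with a `Sitemap:` line.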

How can server errors affecting URL inspection be addressed?

Addressing server errors starts with identifying the root cause of the issue, whether it’s overload, configuration errors, or downtime. Ensure that your server has sufficient resources to handle traffic, or consider upgrading your hosting plan if necessary. Regularly update your server software to patch any vulnerabilities that could lead to configuration issues. Implement monitoring tools to alert you of downtime, and have a response plan in place for rapid resolution. Additionally, examine any third-party services or plugins you are using to ensure they aren’t conflicting with your server’s operation.