Being properly indexed by search engines is essential for a website’s visibility and traffic, and indexing problems can quietly reduce both. Server logs record every request made to the server, including requests from search engine crawlers, so they show how crawlers actually behave on your site rather than how you expect them to behave. By analyzing these logs, website administrators can spot broken links, redirect errors, slow responses, and other problems that interfere with crawling and indexing. This article explains how to use server logs to troubleshoot and resolve indexing issues.

Understanding the Role of Server Logs in Identifying Indexing Problems

Server logs are a crucial resource for diagnosing indexing issues. They provide detailed insights into how search engines access and interact with your website. By analyzing server logs, you can identify indexing errors, understand search engine behavior, and make informed adjustments to improve your site’s visibility in search results.

What Are Server Logs and Why Are They Important?

Server logs are records maintained by your web server that document every request it handles. A typical access log entry captures the client’s IP address, the time of the request, the requested resource, the response status code, the response size, and the user agent. Logs are important because they provide a detailed trail of how users and bots interact with your website, which helps in diagnosing problems such as crawl errors and in optimizing site performance.

Common Indexing Issues Detected Using Server Logs

Common indexing issues that can be detected through server logs include 404 errors (Not Found), which often indicate broken links, and 500 errors, which point to server-side problems. Logs can also reveal long redirect chains or loops, as well as problems with robots.txt, such as the file itself returning an error or crawlers never requesting sections of the site that your rules block unintentionally. Analyzing logs regularly lets you catch these issues before they damage your indexing.

How to Analyze Server Logs for Indexing Errors

To analyze server logs, you typically extract the relevant fields and examine them with tools or scripts. Focus on HTTP status codes, which show how often pages return errors when crawled, and on trends in bot traffic, which can point to issues specific to search engine crawlers. Filtering by date range or by user agent helps narrow down the source of a problem and makes the diagnosis more precise.
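As a rough illustration of filtering by user agent and counting status codes, the following sketch parses lines in the Combined Log Format and tallies the status codes returned to one crawler. It assumes your server writes the Combined Log Format; the sample lines, the `parse_line` and `status_counts_for_bot` names, and the `bot_token` parameter are made up for this example. User agent strings can be spoofed, so treat a match as a hint rather than proof that the request came from the real crawler.

```python
import re
from collections import Counter

# Combined Log Format, as commonly produced by Apache and Nginx:
# ip ident user [time] "method path protocol" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def status_counts_for_bot(lines, bot_token="Googlebot"):
    """Count status codes for requests whose user agent contains bot_token."""
    counts = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry and bot_token.lower() in entry["agent"].lower():
            counts[entry["status"]] += 1
    return counts


# Made-up sample lines (documentation IP range 192.0.2.0/24), for illustration only.
SAMPLE_LINES = [
    '192.0.2.10 - - [10/Oct/2023:13:55:36 +0000] "GET /products HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.77 - - [10/Oct/2023:13:57:40 +0000] "GET /products HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    print(status_counts_for_bot(SAMPLE_LINES))
```

If your server uses a custom log format, the regular expression would need to be adjusted to match it.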

Using Server Logs to Improve Crawl Efficiency

Reviewing server logs shows you which pages are crawled frequently and which receive little or no crawler attention. Use this to make better use of your crawl budget: ensure key pages are easy for search engines to reach, and deprioritize less important resources so crawlers spend less time on them. This can reduce unnecessary server load and help important pages get crawled, and therefore indexed, sooner.
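One way to compare crawl frequency across pages is sketched below. It assumes log lines have already been parsed into dictionaries with hypothetical `path` and `agent` keys, and that you maintain your own list of key pages; the function names and sample data are illustrative only.

```python
from collections import Counter


def crawl_hits_by_path(entries, bot_token="Googlebot"):
    """Count how many times a given crawler requested each path."""
    hits = Counter()
    for entry in entries:
        if bot_token.lower() in entry["agent"].lower():
            hits[entry["path"]] += 1
    return hits


def uncrawled_pages(key_pages, hits):
    """Return the key pages that the crawler never requested in this log sample."""
    return [page for page in key_pages if hits[page] == 0]


# Made-up parsed entries, for illustration only.
SAMPLE_ENTRIES = [
    {"path": "/", "agent": "Googlebot/2.1"},
    {"path": "/", "agent": "Googlebot/2.1"},
    {"path": "/tag/misc?page=9", "agent": "Googlebot/2.1"},
    {"path": "/pricing", "agent": "Mozilla/5.0 (Windows NT 10.0)"},
]
KEY_PAGES = ["/", "/pricing", "/products"]

if __name__ == "__main__":
    hits = crawl_hits_by_path(SAMPLE_ENTRIES)
    print(hits.most_common(5))
    print(uncrawled_pages(KEY_PAGES, hits))
```

A key page missing from the crawler’s hits over a long enough period is a candidate for better internal linking or sitemap coverage, while heavily crawled low-value URLs are candidates for deprioritization.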

Tools for Server Log Analysis

Various tools can be used for server log analysis, from basic command-line utilities to advanced software solutions. AWStats and Webalizer offer comprehensive insights into traffic statistics, while Screaming Frog Log File Analyser provides detailed breakdowns specific to SEO needs. These tools help you automate the log analysis process, saving time and allowing you to focus on implementing SEO improvements.

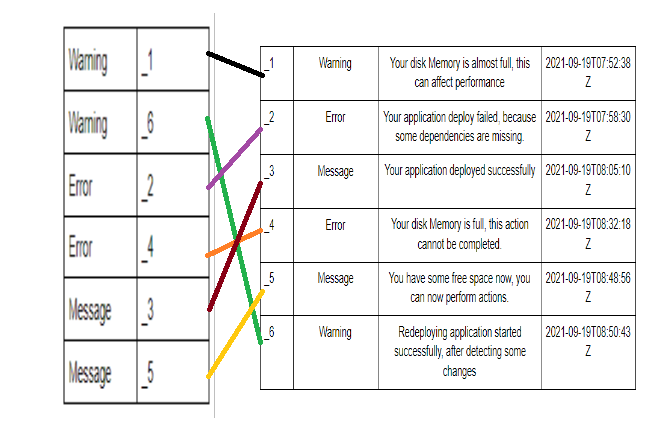

| Common Status Code | Meaning | Resolution Strategy |
|---|---|---|
| 404 | Not Found | Redirect or Replace Links |
| 500 | Internal Server Error | Check Server Configuration |
| 301 | Moved Permanently | Ensure Correct Redirect Chains |
| 302 | Found (Temporary Redirect) | Evaluate Need for Redirection |
| 403 | Forbidden | Review Permissions |

What is log indexing?

Log indexing is the process of organizing and storing log data so that it can be searched, retrieved, and analyzed efficiently. Systems generate large volumes of log data that are impractical to sift through manually. Indexing makes it possible to locate specific entries quickly and to discover patterns in the data, which is particularly useful for troubleshooting, security auditing, and performance monitoring.

Benefits of Indexing Logs

Indexing logs offers several advantages that improve data handling and analysis:

- Efficient Search and Retrieval: Indexing allows for rapid search queries, enabling users to quickly find and retrieve specific logs without manually searching through vast amounts of data.

- Improved Data Organization: With indexing, logs are organized in a structured manner, categorizing them by various parameters such as timestamps, severity, or sources, making it easier to manage and interpret the data.

- Enhanced Analytical Capabilities: Indexed logs are easier to analyze, allowing for in-depth insights and the detection of patterns or anomalies that can inform decision-making and improve operational strategies.

Different Techniques for Indexing Logs

Several techniques can be employed to index logs efficiently:

- Full-Text Indexing: This method involves indexing every word in the logs, making it possible to perform keyword searches swiftly across the entire dataset.

- Field-Specific Indexing: Here, indexing focuses on specific fields or parts of the log entries, such as timestamps or error codes, allowing for targeted queries based on particular criteria.

- Inverted Indexing: This technique creates a mapping from content (like words or phrases) to their locations in the database, thus accelerating search operations.
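To make the inverted indexing idea concrete, here is a minimal in-memory sketch that maps each lowercase token to the set of log line numbers containing it. It is a toy illustration with made-up log lines and hypothetical function names, not how Elasticsearch or any other production system stores its index.

```python
from collections import defaultdict


def build_inverted_index(log_lines):
    """Map each lowercase token to the set of line numbers where it appears."""
    index = defaultdict(set)
    for line_no, line in enumerate(log_lines):
        for token in line.lower().split():
            index[token].add(line_no)
    return index


def search(index, *terms):
    """Return sorted line numbers that contain every one of the given terms."""
    postings = [index.get(term.lower(), set()) for term in terms]
    return sorted(set.intersection(*postings)) if postings else []


# Made-up log lines, for illustration only.
SAMPLE_LOGS = [
    "ERROR disk full",
    "INFO user login",
    "ERROR user timeout",
]

if __name__ == "__main__":
    idx = build_inverted_index(SAMPLE_LOGS)
    print(search(idx, "error"))
    print(search(idx, "error", "user"))
```

Because a lookup only touches the sets for the queried terms, search time depends on how many lines match rather than on the total size of the logs.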

Common Tools Used for Indexing Logs

There are various tools and platforms commonly used to efficiently index logs:

- Elasticsearch: An open-source search engine known for its speed and scalability, capable of handling large volumes of indexed logs while providing powerful search capabilities.

- Splunk: A robust platform famous for log management, offering extensive features for indexing and analyzing log data in real-time.

- Logstash: Part of the ELK stack (Elasticsearch, Logstash, and Kibana), Logstash processes and transforms log data before sending it to Elasticsearch for indexing.

What should you look for in server logs?

Key Indicators of Potential Issues

Understanding what to look for in server logs requires identifying the indicators of potential issues that could impact server performance or security. Here are some key indicators:

- Error Messages: Look for recurring error messages, which might indicate a malfunction in application code or system operations.

- High Latency: Notice any entries showing markedly slow response times, hinting at possible server stress or congestion.

- Service Failures: Identify any records of failed service starts or interactions, which could signal hardware or configuration issues.

Security Concerns and Anomalies

Server logs are crucial for recognizing potential security threats and anomalies. Here are elements to monitor:

- Unauthorized Access Attempts: Frequent failed login attempts could be symptomatic of a brute force attack.

- Anomalous IP Activity: Keep an eye out for unexpected IP addresses attempting to communicate with your server.

- Abnormal User Behavior: Notice if any legitimate user behaves differently, such as accessing data outside of usual working hours.
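A simple way to surface repeated unauthorized access attempts is to count rejected requests per IP address. The sketch below assumes entries already parsed into dictionaries with hypothetical `ip` and `status` keys, and uses an arbitrary threshold of five rejected requests; both the threshold and the choice of status codes 401 and 403 are assumptions you would tune for your own site.

```python
from collections import Counter

REJECTED_STATUSES = {"401", "403"}


def suspicious_ips(entries, threshold=5):
    """Return IPs with at least `threshold` rejected (401/403) requests."""
    failures = Counter(
        entry["ip"] for entry in entries if entry["status"] in REJECTED_STATUSES
    )
    return {ip: count for ip, count in failures.items() if count >= threshold}


# Made-up parsed entries (documentation IP ranges), for illustration only.
SAMPLE_ENTRIES = (
    [{"ip": "192.0.2.50", "status": "401"}] * 6
    + [{"ip": "198.51.100.7", "status": "401"}]
    + [{"ip": "198.51.100.7", "status": "200"}] * 10
)

if __name__ == "__main__":
    print(suspicious_ips(SAMPLE_ENTRIES))
```

A flagged address is only a starting point for investigation, since shared networks and misconfigured clients can also produce bursts of rejected requests.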

Traffic Patterns and Performance Metrics

Analyzing server logs can help understand traffic patterns and measure performance metrics critical for service optimization. Here are aspects to consider:

- Traffic Volume: Monitor the amount of incoming and outgoing traffic to plan for scalability and resource allocation.

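- Resource Utilization: Evaluate CPU, memory, and disk usage data to identify potential upgrades or optimizations. Web server access logs do not normally contain these figures, so they usually come from system logs or monitoring tools reviewed alongside the access logs.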

- Peak Usage Trends: Identify when the server experiences the highest load to optimize operations and resources accordingly.

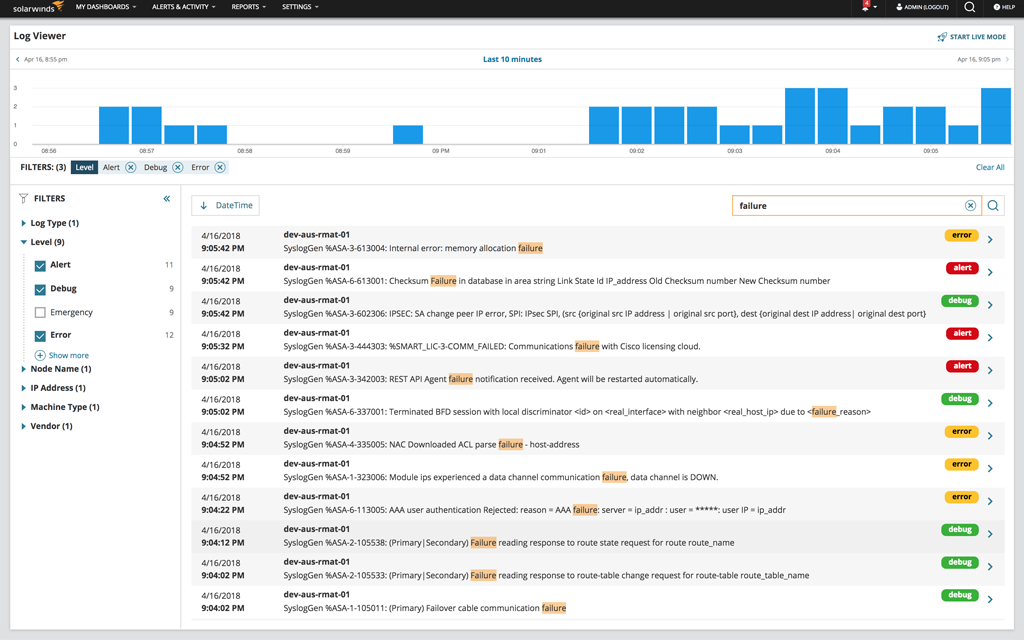

How do I check server logs?

Understanding the Importance of Server Logs

Server logs are critical for diagnosing issues, monitoring performance, and maintaining security. These logs contain detailed records of server activity and can provide insights into various issues that may arise.

1. Error Diagnosis: Logs help in pinpointing where an error occurred and what might have caused it.

2. Performance Monitoring: By examining logs, you can assess how well your server is performing and identify any areas that need improvement.

3. Security Auditing: Logs can reveal unauthorized access attempts and other suspicious activities, contributing to your overall security posture.

Accessing Server Logs on Common Operating Systems

The method to access server logs may vary depending on the operating system your server is running. Here are some typical ways to access logs:

1. Linux Servers: Logs are usually stored in the `/var/log/` directory. For example, Apache logs are typically found in `/var/log/apache2/` or `/var/log/httpd/`.

2. Windows Servers: The Event Viewer is commonly used to check logs. You can open it by searching for Event Viewer in the Start menu and then exploring sections such as the Application and System logs. IIS web server logs are stored separately, by default under `%SystemDrive%\inetpub\logs\LogFiles`.

3. macOS Servers: The Console application can be used to view logs. Open it from Applications > Utilities > Console.

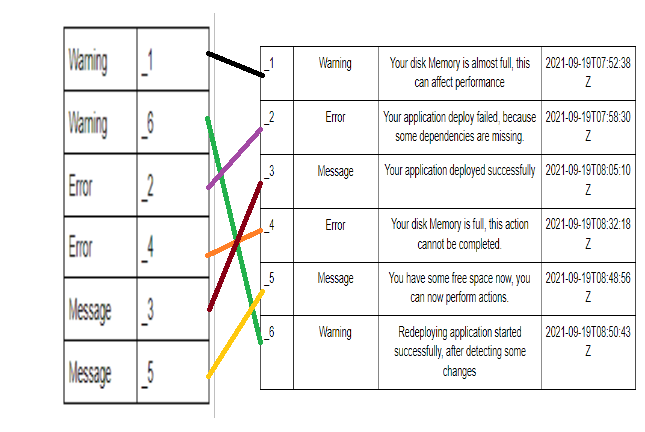

Using Tools to Analyze Server Logs

To effectively analyze and make sense of server logs, various tools can be used to parse and visualize log data, helping you to gain deeper insights.

1. Log Management Systems: Tools like Loggly, Splunk, and ELK Stack can aggregate and examine logs more efficiently.

2. Command-Line Utilities: Utilities such as grep, awk, and tail are powerful for searching and filtering log data in Linux environments.

3. Custom Scripts: Writing scripts in languages like Python or Bash can automate log analysis and generate reports tailored to your specific needs.
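As an example of a small custom script, the sketch below reads a log file line by line, transparently handling rotated logs that have been compressed with gzip, and keeps only lines containing a given substring, much like a basic `grep`. The file names in the usage comment and the function names are hypothetical; substitute the paths used on your own server.

```python
import gzip
from pathlib import Path


def read_log_lines(path):
    """Yield lines from a plain-text or gzip-compressed (.gz) log file."""
    path = Path(path)
    opener = gzip.open if path.suffix == ".gz" else open
    # errors="replace" keeps the script running if a line has invalid bytes.
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")


def filter_lines(lines, needle):
    """Return the lines that contain the given substring."""
    return [line for line in lines if needle in line]


# Example usage (hypothetical paths):
#   for name in ["access.log", "access.log.1.gz"]:
#       for line in filter_lines(read_log_lines(name), "Googlebot"):
#           print(line)
```

Reading with a generator keeps memory use low, which matters because access logs on busy sites can be very large.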

Frequently Asked Questions

How can server logs help in diagnosing indexing issues?

Server logs are valuable for pinpointing indexing issues because they record every request made to a server, including those from search engine crawlers such as Googlebot or Bingbot. By analyzing the logs, you can determine whether these crawlers are able to access your site’s pages correctly. Logs show the status codes returned when crawlers request a page, such as 404 errors for pages not found or 503 errors indicating that the service was temporarily unavailable, for example because of overload or maintenance. This helps identify whether certain pages consistently experience problems or whether a systemic issue affects your site’s overall ability to be crawled and indexed. Server logs can also hint at robots.txt rules that inadvertently block pages: a compliant crawler simply never requests the blocked URLs, so they are missing from the logs.

What specific server log entries should I be looking out for?

When examining server logs to diagnose indexing issues, you should focus on entries related to search engine crawlers and note any unusual patterns. Look for HTTP status codes that indicate errors: 404 for page not found, 403 for forbidden access, 500 for internal server errors, and 503 for server unavailability. These errors can signal problems that need immediate attention. Furthermore, check the frequency and scope of crawler activity; if a crawler is not visiting certain pages, it could suggest those pages are not being indexed. Review entries that show lengthy server response times, which might indicate performance issues affecting crawler accessibility. Also, verify that crawl requests align with your robots.txt rules to ensure important pages are not being inadvertently blocked.
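Checking paths against your robots.txt rules can be sketched with Python’s standard `urllib.robotparser` module. The robots.txt content, the list of important paths, and the `blocked_paths` helper below are made up for illustration. This parser may not interpret every rule exactly as a particular search engine does, so treat the result as a first check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot"):
    """Return the paths that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


# Made-up robots.txt rules and page list, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""
SAMPLE_PATHS = ["/private/report.html", "/blog/post"]

if __name__ == "__main__":
    print(blocked_paths(SAMPLE_ROBOTS, SAMPLE_PATHS))
```

Running the same check over the paths you consider important, and comparing the result with the paths crawlers actually request in your logs, can reveal pages that are blocked unintentionally.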

How do I interpret the data in server logs to improve SEO performance?

Interpreting server logs involves analyzing several elements that can affect SEO performance. First, check that important URLs return the expected status codes to crawlers, which indicates healthy access. Frequent 4xx or 5xx errors on particular pages point to a problem that needs to be resolved. Look at server response times as well: slow responses can cause crawlers to fetch fewer pages, which can hurt indexing, so they are a signal to optimize server performance. Assess your crawl budget by checking how often crawlers request each part of the site; an imbalance may call for site adjustments or content prioritization. Trend analysis over time helps you identify persistent issues or improvements and adapt your strategy accordingly. With thorough log analysis, you can identify broken links, confirm that site elements are accessible, and help search engines crawl your site more efficiently.
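Trend analysis can start with something as simple as the share of requests per day that ended in a 4xx or 5xx status. The sketch below assumes entries already parsed into dictionaries with hypothetical `time` and `status` keys, with timestamps in the format used by the Combined Log Format; the sample data is made up.

```python
from collections import defaultdict
from datetime import datetime

# Timestamp layout used in Combined Log Format entries, e.g. 10/Oct/2023:13:55:36 +0000
TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def daily_error_rate(entries):
    """Return {date: fraction of requests with a 4xx or 5xx status}, sorted by date."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for entry in entries:
        day = datetime.strptime(entry["time"], TIME_FORMAT).date()
        totals[day] += 1
        if entry["status"][0] in "45":
            errors[day] += 1
    return {day: errors[day] / totals[day] for day in sorted(totals)}


# Made-up parsed entries, for illustration only.
SAMPLE_ENTRIES = [
    {"time": "10/Oct/2023:13:55:36 +0000", "status": "200"},
    {"time": "10/Oct/2023:14:02:11 +0000", "status": "404"},
    {"time": "11/Oct/2023:09:15:00 +0000", "status": "200"},
]

if __name__ == "__main__":
    for day, rate in daily_error_rate(SAMPLE_ENTRIES).items():
        print(day.isoformat(), f"{rate:.0%}")
```

A sudden rise in the daily error rate after a deployment or migration is usually worth investigating before it affects how much of the site gets crawled.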

What tools are available for analyzing server logs for indexing issues?

Several tools are available to assist in analyzing server logs, each offering unique capabilities to uncover indexing issues. Splunk and Loggly are highly regarded solutions that offer robust data processing and visualization, making it easier to track crawler activity and error codes. Screaming Frog Log File Analyser specifically caters to SEO needs, providing detailed insights into how crawlers interact with your website. Google Search Console can complement log analysis by identifying issues directly from Google’s perspective. For those who prefer open-source options, GoAccess and AWStats offer comprehensive log analysis capabilities without a hefty price tag. These tools facilitate a deeper understanding of accessibility, crawlability, and server response issues, empowering users to make informed decisions to optimize site indexing.