In the sprawling digital landscape, visibility is key. Websites aim to ensure that their content reaches the widest possible audience, with search engine indexing playing a crucial role. However, an overlooked component within this equation is often the humble robots.txt file. This small yet powerful directive guides search engine crawlers on what can and can’t be indexed on your website. Misconfigurations or unintentional blockages within your robots.txt file could inadvertently hinder your site’s visibility, preventing valuable content from appearing in search results. Understanding and optimizing your robots.txt file is therefore imperative for maximizing your site’s search engine performance.

Common Reasons Your Robots.txt File Is Hindering Indexing

When it comes to online visibility, ensuring that your website is effectively indexed by search engine crawlers is vital. The Robots.txt file serves as a useful tool for managing which parts of your site are accessible to search engines. However, a misconfigured file can inadvertently block important sections from being indexed, affecting your site’s visibility.

Understanding the Role of Robots.txt in Web Crawling

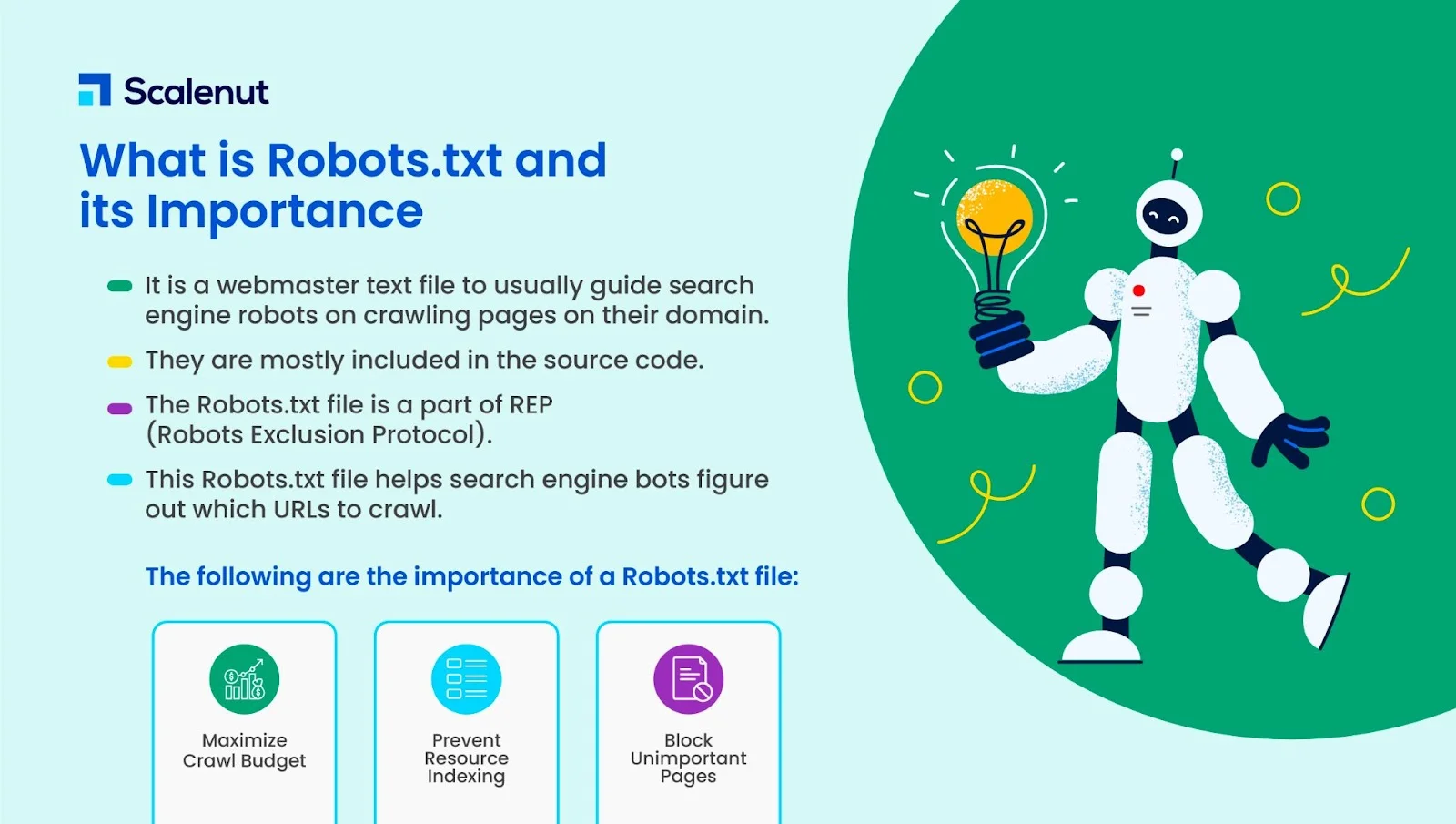

The Robots.txt file is a simple text file located at the root of your website, designed to instruct web crawlers on which pages they are allowed to index. By specifying disallow directives, you can control the portions of your site that should be private or restricted from search engines. However, improper configurations or misunderstandings about its purpose can lead to unwanted blocking of critical content, impacting your SEO performance.

Incorrect Use of Disallow Directives

One of the most common issues is the incorrect employment of the disallow directive. If you inadvertently apply this directive to entire sections or directories of your site, you might find that crucial pages are not being indexed. For example, a line reading Disallow: / blocks the entire website from being indexed. It’s essential to review and double-check these directives to ensure that important content remains accessible to search engines.

Failure to Update Robots.txt After Site Changes

Sites frequently undergo restructuring and updates, which can change the structure of URLs or directories. Failing to update the Robots.txt file to reflect these changes can result in outdated directives continuing to block new or moved content. Regular audits of this file are necessary to align it with the current architecture of your site and ensure that the correct pages are being indexed.

Issues with Comment Misplacements and Formatting Errors

Formatting errors and misplaced comments in the Robots.txt file can cause misinterpretation of your intentions. Lines in the file should be clear and concise, as excess information or typos can result in entire sections of the site being incorrectly indexed. Ensure that comments are clearly separated from directives and that the file follows proper syntax.

Testing and Verifying Your Robots.txt File

Using tools to test and verify the configuration of your Robots.txt file is crucial. There are several online tools and services provided by search engines like Google Search Console that allow you to input your Robots.txt file and see how it’s being interpreted. Regular testing can help catch errors and ensure that your file is correctly directing crawlers.

| Issue | Impact | Solution |

|---|---|---|

| Incorrect Disallow Directives | Important content might be blocked from indexing | Review and adjust directives to ensure key content is accessible |

| Outdated File After Site Changes | New or moved content may not get indexed | Update Robots.txt to reflect current site structure |

| Formatting Errors | Misinterpretation leading to improper indexing | Check for syntax errors and correct comment placements |

| Lack of Testing and Verification | Overlooked errors may persist, affecting visibility | Regularly use tools to test and validate Robots.txt settings |

Does robots.txt prevent indexing?

Understanding the Function of robots.txt in Search Engines

The robots.txt file serves as a guide for web crawlers, instructing them on how to navigate a website’s pages. It is essential to understand that while the main function of the robots.txt file is to control crawling, it does not prevent indexing by itself.

- Crawling Control: The primary purpose of robots.txt is to inform search engines about which parts of a website should not be crawled. If a URL is disallowed in robots.txt, search engines will avoid crawling that page, assuming they comply with the directive.

- Indirect Indexing Prevention: If a page is not crawled, it is less likely to be indexed because search engines do not have its content to analyze. However, if a page is linked from external sites, it can still be indexed even if it is disallowed in robots.txt.

- Misconceptions: Some believe that robots.txt can completely block indexing, which is not accurate. To effectively prevent pages from being indexed, utilizing other methods such as noindex meta tags or password protection is required when the page is accessible.

How Can Indexing Be Avoided Successfully?

To effectively manage indexing beyond the capabilities of the robots.txt file, several strategies can be adopted to ensure comprehensive control over what content appears in search engine indexes.

- Meta Noindex Tags: Implementing a ‘noindex’ meta tag within the HTML of a web page instructs search engines to not index the page. Even if the page is crawled, the inclusion of this tag will prevent it from appearing in search results.

- Password Protection: Securing pages through authentication methods ensures they are inaccessible to crawlers. As a result, these pages are neither crawled nor indexed unless they are publicly linked.

- Search Console Removal Tool: Using tools available in Google Search Console to remove content from the index provides an immediate method for managing indexed pages.

Common Misconceptions About robots.txt and Indexing

Misunderstanding the role of the robots.txt file can lead to mismanagement of a site’s visibility in search engines. There are several common misconceptions about its functionality.

- Complete Blockage: Some assume that by disallowing a page in robots.txt, it completely prevents indexing. However, external links pointing to those pages can still lead to them being indexed.

- Security Misbelief: Relying solely on robots.txt for preventing sensitive information from being accessible can be risky, as its directives are not a means of limiting access or providing security.

- X-Robots-Tag Header: This alternative offers more flexible control over indexing. Unlike robots.txt, it can be used in HTTP headers to specify indexing and crawling instructions for multimedia files or other resources.

How to fix robots.txt problem?

Fixing a robots.txt file issue involves several steps to ensure that your site is correctly guiding search engine crawlers about which pages should and should not be crawled. Here are some details on how to address common problems:

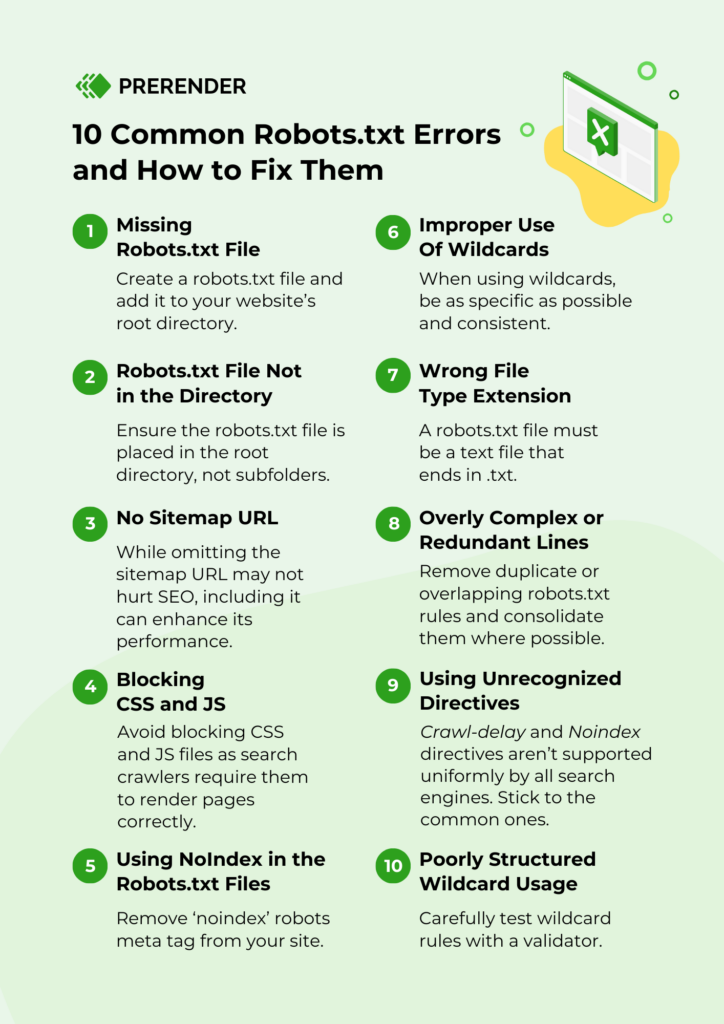

Understanding Common Robots.txt Errors

Understanding the common errors within a robots.txt file is crucial in resolving them effectively. Here’s a breakdown of typical issues:

1. Syntax Errors – The format of a robots.txt file is crucial. Ensure that the file follows the correct syntax and indentation.

2. Blockage of Important Pages – Often, pages that should be indexed by search engines are accidentally blocked. Double-check disallowed paths.

3. File Accessibility Issues – Verify that the robots.txt file is accessible from the root domain (example.com/robots.txt).

Steps to Resolve Robots.txt Problems

Addressing these problems can be systematic. Here’s a step-by-step approach:

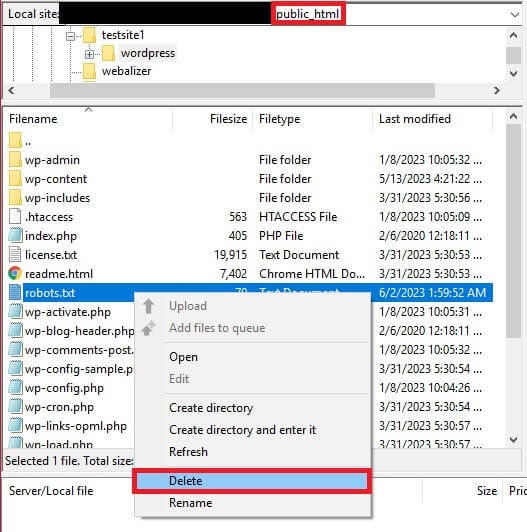

1. Validate the Current Robots.txt – Use tools like Google’s Robots.txt Tester to identify errors in your file.

2. Edit Your Robots.txt – Modify or create a new robots.txt file and include correct directives. Ensure you have lines beginning with User-agent followed by the crawler name, and lines Disallow or Allow to control access.

3. Upload and Test – Save and upload the corrected file to your site’s root directory. Test again using online validators.

Best Practices for Creating a Robots.txt File

Applying best practices in creating and maintaining a robots.txt file can preempt problems:

1. Keep It Simple – Avoid overly complex rules that might confuse or block important content.

2. Regularly Review – Websites evolve, so regularly check your rules to ensure they align with your current SEO strategy.

3. Use Wildcards and Dollar Signs Judiciously – Employ these to handle patterns or delimit URLs but do so with care to avoid unintended blockage.

How to remove robots.txt block?

Understanding the Purpose of Robots.txt

To effectively remove a block created by robots.txt, it’s crucial to first comprehend its purpose. This file is used to manage crawler access to different parts of a website. It’s typically used to:

- Control Access: Allow or disallow web crawlers from accessing certain directories on a site.

- Guide Crawlers: Direct crawlers towards specific pages that you want to have indexed.

- Optimize Crawl Rates: Help search engines focus on high-priority pages by limiting access to less important ones.

Steps to Modify the Robots.txt File

If you’ve decided that certain blocks are unnecessary, you can modify the robots.txt file by following these steps:

- Access the File: Use an FTP client or hosting service panel to navigate to the root of your website’s directory where the robots.txt file is located.

- Edit the File: Open the file in a text editor and locate the user-agent or specific sections that’s blocking the pages you want visible to search engines.

- Change Disallow to Allow: Replace any Disallow lines with Allow or simply remove specific rules to grant crawlers access.

Verifying Changes and Ensuring Proper Functionality

Once the robots.txt file has been edited, it’s important to confirm that changes are effective and that the site maintains proper functionality:

- Validate File Syntax: Use online robots.txt validators to ensure there are no syntax errors that could impact your site’s access controls.

- Test Using Webmaster Tools: Leverage tools like Google Search Console to test the accessibility of the affected pages and see how Google interprets the updated robots.txt file.

- Monitor Crawler Access: Keep an eye on crawl reports in webmaster tools to observe changes in how search engines are accessing and indexing your site.

How to fix blocked by robots.txt in Shopify?

If you are encountering issues with being blocked by robots.txt on Shopify, you might be facing limitations where search engines are prevented from crawling certain areas of your site. Here’s how you can address this issue effectively:

Understanding Shopify’s Robots.txt

Shopify automatically generates a robots.txt file for each store, which by default restricts access to some areas.

– Default Configuration: Shopify sets up its robots.txt to block access to certain resources like the checkout page, shopping cart, and search result pages to improve SEO performance.

– Accessing Robots.txt: To view your current robots.txt file, append `/robots.txt` to your store’s domain URL in a web browser.

– Why It’s Important: The robots.txt file is crucial for ensuring that search engines index the appropriate portions of your site, enhancing your store’s visibility.

Modifying the Robots.txt File on Shopify

Though customization was not offered historically, Shopify now allows more flexibility with modifying the robots.txt file via their admin interface.

– Access Through Admin Interface: Go to your Shopify admin, navigate to Online Store, and then to Preferences where the robots.txt file can be edited with Liquid.

– Make Precise Changes: Use the Shopify admin to make specific changes like allowing or blocking specific bots if necessary.

– Confirming Changes: After making changes, you can review the updated robots.txt file similarly by visiting your domain with `/robots.txt`.

Best Practices to Optimize Your Robots.txt

To ensure your robots.txt is optimally configured, follow these guidelines:

– Keep Essential Pages Accessible: Make sure product pages, collections, and blog content are not blocked by the robots.txt file.

– Temporary Blocks: If you must block content, ensure it is temporary and monitor the impact on search engine rankings.

– Consult with SEO Experts: Always consider getting feedback from an SEO expert to ensure your robots.txt file aligns with your SEO strategy and visibility goals.

Frequently Asked Questions

What is the purpose of a robots.txt file, and how does it affect indexing?

The robots.txt file is a crucial part of website management, used to instruct search engine crawlers on how they can interact with and index a site’s pages. Its primary purpose is to guide web crawlers, ensuring that sensitive or irrelevant data is not indexed, which helps control the online visibility of content. By specifying which parts of a website should not be crawled, you prevent indexation of duplicate content, internal search results, or private parts of a site. However, incorrect configurations in the robots.txt file can accidentally block crucial pages from being indexed, resulting in significant drops in search engine visibility and potential traffic loss. Therefore, it is essential to regularly review and test this file to ensure it aligns with your current SEO strategy and objectives.

How can a disallow directive in robots.txt block indexing of important pages?

A disallow directive in the robots.txt file explicitly instructs search engine bots not to visit certain parts of a website. This directive can inadvertently block important pages from being indexed if not carefully applied. For instance, employing a generic disallow for a directory that contains both irrelevant and vital content could lead to the exclusion of all its contents from search engine results. It is important to remember that once a page is disallowed in the robots.txt, search engines typically won’t index the page at all, making it invisible in their search results. To prevent this, it is crucial to correctly specify the directories or individual files that should be excluded, double-checking the syntax and ensuring no essential content is unintentionally barred.

What are the consequences of unintentionally blocking search engine robots from indexing certain pages?

If a robots.txt file mistakenly blocks search engines from indexing pivotal pages, the consequences can be dire for a website’s search engine optimization (SEO). Such errors can lead to a loss of organic traffic, as important pages such as product listings, service descriptions, or informational articles might not appear in search engine results. This situation can significantly reduce the site’s online visibility and authority, directly impacting conversions and lead generation. Moreover, for e-commerce sites, blocking essential pages can lead to a decrease in sales and user engagement. Regularly auditing the robots.txt file is essential to ensure that no economically or strategically important content is inadvertently blocked from indexing.

How can you audit a robots.txt file to ensure it’s not blocking necessary content?

To effectively audit a robots.txt file, start by reviewing the entire content systematically to ensure that all directives align with your site’s goals and SEO strategy. Employ tools like Google’s Search Console to identify any coverage issues indicating blocked URLs. Additionally, use crawler simulators or spider tools to verify how search engines interpret your robots.txt file. This will highlight any blocked URLs that should be indexed. Regularly updating and testing robots.txt settings can help prevent new blocks from arising due to website changes or added URLs. Pay attention to the syntax, especially the placement of ‘Disallow’ and ‘Allow’ directives, to avoid conflicts that can lead to blocking necessary content unintentionally.